Child Mind Institute — Problematic Internet Use

Relating Physical Activity to Problematic Internet Use

www.kaggle.com

About the Competition

- The aim of this competition is to develop a model that predicts problematic internet usage levels based on physical activity and health data from children and adolescents.

- Since the current method of measuring problematic internet use requires complex expert evaluation, the goal is to identify it through easily obtainable physical activity indicators instead.

- This competition is hosted by the Child Mind Institute and sponsored by Dell Technologies and NVIDIA, with a total prize pool of $60,000. The evaluation metric used is quadratic weighted kappa.
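
For reference, quadratic weighted kappa is usually written as below for $N$ ordinal classes. This is the standard textbook form rather than something quoted from the competition page. Here $O$ is the observed confusion matrix between true and predicted labels, and $E$ is the expected matrix built from the outer product of the two label histograms, scaled to the same total as $O$. A value of 1 means perfect agreement and 0 means chance-level agreement.

$$
\kappa = 1 - \frac{\sum_{i,j} w_{ij}\, O_{ij}}{\sum_{i,j} w_{ij}\, E_{ij}},
\qquad
w_{ij} = \frac{(i-j)^2}{(N-1)^2}
$$

Because the penalty grows with the squared distance between labels, predictions that are off by two levels cost four times as much as predictions that are off by one.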

Shake-Up?

What the competition organizer says:

- Many participants adjusted model hyperparameters, thresholds, and random seeds to improve public leaderboard scores

- Submission analysis:

- Top 10 teams on public: Average 212 submissions (median 199)

- Top 10 teams on private: Average 64 submissions (median 25)

- This suggests attempts to artificially inflate scores on the public leaderboard

- Overfitting almost certainly occurred

Striking differences in the proportion of missing values between train and test data

- Actual discussion here: Link

- The missing percentage of series parquet in test: 80-85% (~60% in train)

- The missing percentage of FGC features in test: 70-75% (29.8% in train)

- The missing percentage of BIA features in test: 60-65% (33.7% in train)

- The missing percentage of PreInt_EduHx-computerinternet_hoursday in test: 40-50% (3% in train)

- This might be the reason why KNNImputer works so well on the private test set despite decreasing the CV on the train set 😐, both with leakage and without leakage (much worse)
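
A comparison like the one above can be sketched in a few lines. This is only an illustration with toy rows: the column names (`FGC_a`, `BIA_a`, and so on) are hypothetical stand-ins, not the real competition columns, and grouping columns by name prefix is an assumption about how the feature groups are identified.

```python
import math

def is_missing(value):
    """Treat None and float NaN as missing."""
    return value is None or (isinstance(value, float) and math.isnan(value))

def missing_rate(rows, columns):
    """Fraction of missing cells across the given columns."""
    total = len(rows) * len(columns)
    if total == 0:
        return 0.0
    missing = sum(is_missing(row.get(col)) for row in rows for col in columns)
    return missing / total

def compare_missing(train_rows, test_rows, prefixes):
    """Return {prefix: (train_rate, test_rate)} for columns sharing a name prefix."""
    all_columns = sorted({col for row in train_rows + test_rows for col in row})
    report = {}
    for prefix in prefixes:
        cols = [c for c in all_columns if c.startswith(prefix)]
        report[prefix] = (missing_rate(train_rows, cols), missing_rate(test_rows, cols))
    return report

# Toy rows with hypothetical column names (not the real competition data).
train = [
    {"FGC_a": 1.0, "FGC_b": None, "BIA_a": 2.0},
    {"FGC_a": 3.0, "FGC_b": 4.0, "BIA_a": float("nan")},
]
test = [
    {"FGC_a": None, "FGC_b": None, "BIA_a": 2.0},
    {"FGC_a": None, "FGC_b": 4.0, "BIA_a": None},
]

report = compare_missing(train, test, ["FGC", "BIA"])
for prefix, (train_rate, test_rate) in report.items():
    print(f"{prefix}: train {train_rate:.0%} missing, test {test_rate:.0%} missing")
```

A large gap between the two rates for a feature group is a hint that validation folds drawn from train may not behave like the hidden test set.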

9th Place Solution: This is not a lottery competition (LB rank 9; best notebook private score: 0.493)

- Why did we observe such dramatic drops in the Leaderboard rankings?

- Data leakage from the hidden dataset

- During the competition, he mentioned "Private dataset leakage.. Cv and PL dont have any corelation."

- "in my experience, the score above 0.47 only data leakage."

- KNNImputer process in the shared notebooks contained a bug

- I believe this is why everyone who made their final submission based on the shared notebook fell significantly in the rankings.

- What didn't work:

- Treating the SII as a classification problem instead of a regression problem.

- Predicting PCIA-Total.

- Improving the SII value through post-processing.

- Testing various methods and optimizing threshold values for the Kappa metric.

- Calculating the SII value separately for datasets with and without accelerometer data.

- What worked:

- I followed a phased approach for missing data:

a. Used all data (including rows with missing SII).

b. Combined train and test datasets to train the model.

c. Predicted missing values separately for train and test datasets.

d. Dropped rows with missing data for SII or PCIA1–PCIA19.

e. Removed columns with a very high proportion of missing values.

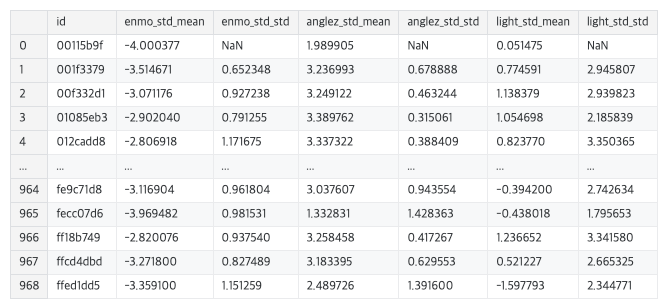

f. For feature engineering, I computed mean, standard deviation, kurtosis, and skew values using a windowing method for both accelerometer data and columns within the same category.

g. Conducted feature selection for an LGBM model, reducing the features from 200–300 to 50–60.

h. Used voting and stacking ensemble techniques with LGBM (GBDT, GOSS, and DART) and CatBoost models.

i. For the final results, I selected the most common label.

- Using the windowing method:

- Data is divided into fixed-size intervals (windows)

- Statistical values were calculated for each interval

- Calculated statistical values:

- Mean: Central tendency of the data

- Standard deviation: Measure of data dispersion

- Kurtosis: Measure of how peaked/flat the data distribution is

- Skew: Measure of data distribution asymmetry

- This calculation was applied to two types of data:

- accelerometer data

- columns within the same category
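
The windowing idea can be sketched roughly as follows. This is a minimal illustration, not the author's code: the window size, the use of non-overlapping windows, the population (divide-by-n) moments, and the excess-kurtosis convention are all assumptions, and `window_stats` is a hypothetical helper name.

```python
import math

def window_stats(values, window_size):
    """Split `values` into consecutive non-overlapping windows and return
    mean, std, skew, and excess kurtosis for each full window.
    A trailing partial window is dropped."""
    stats = []
    for start in range(0, len(values) - window_size + 1, window_size):
        window = values[start:start + window_size]
        n = len(window)
        mean = sum(window) / n
        # Central moments, population form (dividing by n).
        m2 = sum((x - mean) ** 2 for x in window) / n
        m3 = sum((x - mean) ** 3 for x in window) / n
        m4 = sum((x - mean) ** 4 for x in window) / n
        std = math.sqrt(m2)
        # Skew and kurtosis are undefined for a constant window; report 0.0.
        skew = m3 / m2 ** 1.5 if m2 > 0 else 0.0
        kurtosis = m4 / m2 ** 2 - 3.0 if m2 > 0 else 0.0
        stats.append({"mean": mean, "std": std, "skew": skew, "kurtosis": kurtosis})
    return stats

# Toy signal standing in for one accelerometer channel.
signal = [1.0, 2.0, 3.0, 4.0, 0.0, 0.0, 0.0, 4.0]
features = window_stats(signal, window_size=4)
for i, row in enumerate(features):
    print(i, {name: round(value, 3) for name, value in row.items()})
```

The same function could be applied to a row of columns from one feature category by passing those values as the sequence, which is one way to read "columns within the same category".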

- Highest Private Leaderboard Score: 0.493

- Unlike my selected solution, I used the PCIA7 value from the train dataset as it was.

- For the test set, I used the predictions made by the model.

- Why did I do this?

- Because after predicting all PCIA values, I observed that the Kappa score for PCIA7 was significantly higher than for the others.

- For this reason, I decided to proceed with this approach.

- However, I didn’t include this in my final submission because I noticed this three days before the deadline.

- The two notebooks I tested showed little difference, with private leaderboard scores of 0.482 and 0.485.

- I had set a CV threshold of 0.450, so I chose not to submit these.

- Among the three notebooks I didn’t submit, one had a CV score of 0.451 and a private leaderboard score of 0.493.

- Conclusion: This competition was absolutely not a matter of luck for me.

16th Place Solution

- Code: Link

- Main points:

- imputation of missing values with IterativeImputer

- feature engineering from parquet files

- LightGBM training with custom QWK objective and metric

- performing 10 x 10 nested cross-validation to get reliable validation scores and stable test predictions

- performing threshold optimization only once, via grid search, using the overall predictions from the nested cross-validation
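
The single threshold-optimization step in the main points above might look something like this sketch. It assumes four ordinal labels (0 to 3), a regression-style score per sample pooled from all validation folds, and a small hand-picked grid of candidate cut points; none of these details are taken from the 16th place code, and the function names are hypothetical.

```python
from itertools import combinations

N_CLASSES = 4  # assumption: ordinal labels 0..3

def quadratic_weighted_kappa(y_true, y_pred, n_classes=N_CLASSES):
    """QWK computed from the observed and expected confusion matrices."""
    observed = [[0] * n_classes for _ in range(n_classes)]
    for t, p in zip(y_true, y_pred):
        observed[t][p] += 1
    n = len(y_true)
    true_hist = [sum(row) for row in observed]
    pred_hist = [sum(observed[i][j] for i in range(n_classes)) for j in range(n_classes)]
    num = den = 0.0
    for i in range(n_classes):
        for j in range(n_classes):
            weight = (i - j) ** 2 / (n_classes - 1) ** 2
            num += weight * observed[i][j]
            den += weight * true_hist[i] * pred_hist[j] / n
    return 1.0 - num / den if den > 0 else 0.0

def apply_thresholds(scores, thresholds):
    """Label = number of thresholds the score meets or exceeds."""
    return [sum(s >= t for t in thresholds) for s in scores]

def grid_search_thresholds(y_true, oof_scores, grid):
    """Try every increasing triple of cut points from `grid` once, on the pooled
    out-of-fold scores, and keep the triple with the highest QWK."""
    best_kappa, best_thresholds = float("-inf"), None
    for thresholds in combinations(sorted(grid), N_CLASSES - 1):
        kappa = quadratic_weighted_kappa(y_true, apply_thresholds(oof_scores, thresholds))
        if kappa > best_kappa:
            best_kappa, best_thresholds = kappa, thresholds
    return best_thresholds, best_kappa

# Toy labels and pooled out-of-fold scores (illustrative values only).
y_true = [0, 0, 0, 1, 1, 2, 2, 3]
oof_scores = [0.1, 0.3, 0.4, 0.9, 1.2, 1.7, 2.1, 2.8]
grid = [0.5, 1.0, 1.5, 2.0, 2.5]

best_thresholds, best_kappa = grid_search_thresholds(y_true, oof_scores, grid)
print(best_thresholds, round(best_kappa, 3))
```

Searching once on the pooled predictions, rather than once per fold, gives a single set of cut points to apply to the test predictions and avoids tuning thresholds to the noise of any individual fold.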

- Discussion question #1 about imputation: What kind of reasoning led to filling in the missing values? Some may argue that the fact that the data is missing itself is valuable information and should not be filled in. Especially since LightGBM can train without handling missing values.

- Author's answer: Indeed, as you mentioned, I don't have a clear view of why missing value imputation was effective either. However, I think that when the missing feature is strongly correlated with the target (in this competition, for instance, PreInt_EduHx-computerinternet_hoursday), it might have been better to impute the missing values rather than indirectly predicting the target from other features.

- Discussion question #2 about number of folds: I think a large number of folds may lead to overfitting to the validation data, especially with small data, but does the nested CV prevent this? Why did you choose 10 folds?

- Author's answer: Yes. Since no optimization was performed on the test data for each fold, I believe there is no risk of overfitting by increasing the number of folds.

10th Place Solution

- Used only 5 features with a hierarchical Bayes model

- Code: https://www.kaggle.com/code/junpeimorioka/10th-place-5-features-hierarchical-bayes

Final Conclusion

- Most of the top-ranked participants realized that the CV-LB relationship was weak in this competition.

- This indicates that we should stick to the CV score.

- 1. Threshold optimization led to unstable results

- 2. Simpler models were used because of the unstable LB-CV relationship

- 3. Data leakage problem

- 4. SII vs. PCIAT total as the target label

- 5. Using mean, std, ... values instead of an autoencoder (led to lower CV score)

- 6. Various imputations

- 7. It was more common to drop optimizations in favor of a simpler model

My Solution

- It was my first competition, but I was aware of the CV-LB score problem in this competition.

- However, I wasn't able to establish a stable CV standard the way the top solutions did.

- I also drew extensively on ideas from high-LB-score solutions, for example, autoencoder and TabNet.

- However, I found that a simpler model with both of them could score up to 0.439, the best among my solutions, which is a bronze medal score.

Persistence is very important. You should not give up unless you are forced to give up.

- Elon Musk -
