Ninth competition following Youhan Lee's curriculum. Regression competition using tabular data.

Zillow Prize: Zillow’s Home Value Prediction (Zestimate)

Can you improve the algorithm that changed the world of real estate?

www.kaggle.com

First Kernel: Simple Exploration Notebook - Zillow Prize

- EDA kernel focused on univariate correlation analysis.

Insight / Summary:

1. Removing outliers (the code caps logerror at the 1st and 99th percentiles rather than dropping rows)

ulimit = np.percentile(train_df.logerror.values, 99)

llimit = np.percentile(train_df.logerror.values, 1)

# .ix was removed from pandas; .loc with a boolean mask does the same capping

train_df.loc[train_df['logerror'] > ulimit, 'logerror'] = ulimit

train_df.loc[train_df['logerror'] < llimit, 'logerror'] = llimit
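The same capping can also be written with `numpy.clip`. The sketch below is only an illustration: the synthetic array stands in for `train_df.logerror`, and the helper name `clip_to_percentiles` is hypothetical. It shows that values outside the 1st to 99th percentile range are pulled to the limits while the number of rows stays the same.

```python
import numpy as np

def clip_to_percentiles(values, lower_pct=1, upper_pct=99):
    """Cap values below/above the given percentiles (rows are kept, not dropped)."""
    values = np.asarray(values, dtype=float)
    llimit = np.percentile(values, lower_pct)
    ulimit = np.percentile(values, upper_pct)
    return np.clip(values, llimit, ulimit), llimit, ulimit

# Synthetic stand-in for train_df.logerror: mostly small errors plus two extreme values
rng = np.random.default_rng(0)
logerror = np.concatenate([rng.normal(0.0, 0.05, size=998), [4.0, -4.0]])

clipped, llimit, ulimit = clip_to_percentiles(logerror)

# The row count is unchanged; only the tails are capped
print(len(logerror), len(clipped))
print(llimit, clipped.min(), ulimit, clipped.max())
```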

Second Kernel: Simple XGBoost Starter (~0.0655)

- A deliberately simple baseline kernel using XGBoost.

Insight / Summary:

1. XGBoost Dmatrix

d_train = xgb.DMatrix(x_train, label=y_train)

d_valid = xgb.DMatrix(x_valid, label=y_valid)

- DMatrix is a special data structure used in XGBoost.

- It's an object that converts regular numpy arrays or pandas DataFrames into a format that XGBoost can process efficiently.

- The main reasons for using DMatrix are:

- Memory efficiency: Stores data in an optimized format to save memory

- Training speed: Prepares data in an optimized format so XGBoost can train quickly

- Sparse matrix support: Can efficiently handle data when it's sparse

Third Kernel: Zillow EDA On Missing Values & Multicollinearity

- EDA focused on missing values and multicollinearity.

- Missing Value Analysis

- Correlation Analysis

- Top Contributing Features (Through XGBoost)

- Correlation Analysis

- Multicollinearity Analysis

- Univariate Analysis

- Bivariate Analysis

Insight / Summary:

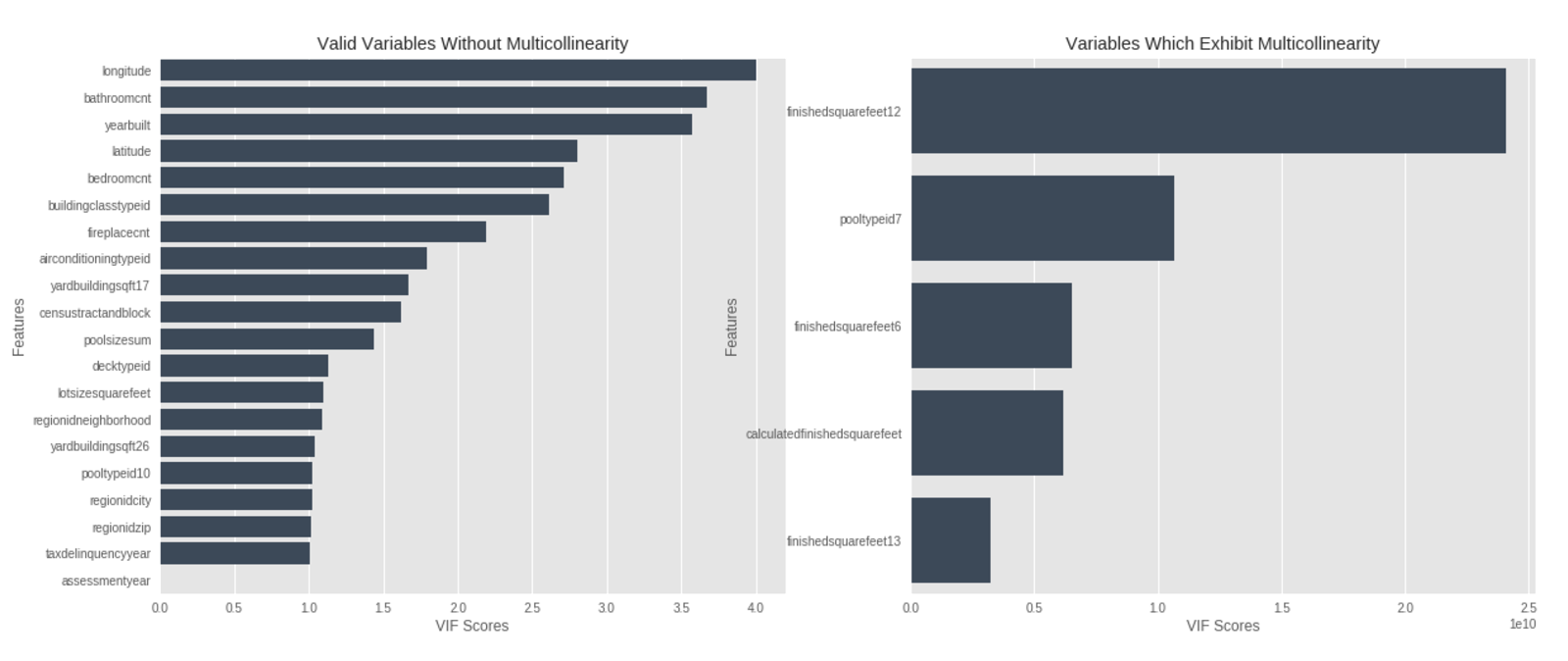

1. Multicollinearity Analysis

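# Imports for data handling and plotting (aliases match the code below)

import pandas as pd

import matplotlib.pyplot as plt

import seaborn as sn

# Import function for calculating VIF (Variance Inflation Factor)

from statsmodels.stats.outliers_influence import variance_inflation_factor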

# Hide warning messages

import warnings

warnings.filterwarnings("ignore")

# Define function for calculating VIF

def calculate_vif_(X):

    variables = list(X.columns)

    # Calculate VIF scores for each variable and return as dictionary

    vif = {variable: variance_inflation_factor(exog=X.values, exog_idx=ix)

           for ix, variable in enumerate(variables)}

    return vif

# Select numerical columns only

numericalCol = []

for f in merged.columns:

    # Select columns that are not object type and exclude specific columns (parcelid, transactiondate, logerror)

    if merged[f].dtype != 'object' and f not in ["parcelid", "transactiondate", "logerror"]:

        numericalCol.append(f)

# Create dataframe with missing values filled with -999

mergedFilterd = merged[numericalCol].fillna(-999)

# Calculate VIF scores

vifDict = calculate_vif_(mergedFilterd)

# Convert VIF results to dataframe

vifDf = pd.DataFrame()

vifDf['variables'] = list(vifDict.keys())

vifDf['vifScore'] = list(vifDict.values())

# Sort by VIF score in descending order

vifDf.sort_values(by=['vifScore'],ascending=False,inplace=True)

# Variables with VIF score ≤ 5 (no multicollinearity)

validVariables = vifDf[vifDf["vifScore"]<=5]

# Variables with VIF score > 5 (with multicollinearity)

variablesWithMC = vifDf[vifDf["vifScore"]>5]

# Create subplots for visualization

fig,(ax1,ax2) = plt.subplots(ncols=2)

fig.set_size_inches(20,8)

# Visualize VIF scores for variables without multicollinearity

sn.barplot(data=validVariables,x="vifScore",y="variables",ax=ax1,orient="h",color="#34495e")

# Visualize VIF scores for top 5 variables with multicollinearity

sn.barplot(data=variablesWithMC.head(5),x="vifScore",y="variables",ax=ax2,orient="h",color="#34495e")

# Set graph titles and labels

ax1.set(xlabel='VIF Scores', ylabel='Features',title="Valid Variables Without Multicollinearity")

ax2.set(xlabel='VIF Scores', ylabel='Features',title="Variables Which Exhibit Multicollinearity")

Overall explanation: This code demonstrates the process of analyzing multicollinearity between features in the dataset. Multicollinearity refers to strong linear relationships among the independent variables; it makes coefficient estimates in linear models unstable and can degrade model performance.

Main steps:

- Selects only numerical variables for analysis

- Calculates VIF (Variance Inflation Factor) scores, which measure multicollinearity

- Generally, VIF scores above 5 or 10 indicate multicollinearity; this code uses 5 as the threshold

- Visualizes results in two graphs:

- Left graph: Variables without multicollinearity (VIF ≤ 5)

- Right graph: Top 5 variables with multicollinearity (VIF > 5)

This analysis helps identify which variables have strong correlations with each other, which is valuable information for preprocessing steps like feature selection or dimensionality reduction.
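To make the VIF score itself less of a black box, here is a minimal NumPy sketch of the textbook definition, VIF_j = 1 / (1 - R_j^2), where R_j^2 comes from regressing feature j on all the other features. The function name `vif_scores` and the synthetic data are assumptions for illustration. This version adds an intercept to each regression; as far as I know, the statsmodels function regresses on exactly the columns it is given, so its numbers can differ unless a constant column is included.

```python
import numpy as np

def vif_scores(X):
    """Return VIF_j = 1 / (1 - R_j^2) for each column j of a 2-D array X.

    R_j^2 comes from an ordinary least squares fit of column j on all
    other columns plus an intercept.
    """
    X = np.asarray(X, dtype=float)
    n_rows, n_cols = X.shape
    scores = []
    for j in range(n_cols):
        target = X[:, j]
        others = np.delete(X, j, axis=1)
        design = np.column_stack([np.ones(n_rows), others])
        coef, *_ = np.linalg.lstsq(design, target, rcond=None)
        residual = target - design @ coef
        ss_res = float(residual @ residual)
        ss_tot = float(((target - target.mean()) ** 2).sum())
        r_squared = 1.0 - ss_res / ss_tot
        scores.append(1.0 / (1.0 - r_squared))
    return np.array(scores)

# Synthetic data: "b" is nearly a copy of "a", "c" is independent of both
rng = np.random.default_rng(0)
a = rng.normal(size=1000)
b = a + rng.normal(scale=0.1, size=1000)
c = rng.normal(size=1000)

scores = vif_scores(np.column_stack([a, b, c]))
print(dict(zip(["a", "b", "c"], scores.round(2))))
```

With the threshold of 5 used above, `a` and `b` would land in the "with multicollinearity" group and `c` in the "valid" group.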

Fourth Kernel: XGBoost, LightGBM, and OLS and NN

- Kernel ensembling several prediction methods: XGBoost, LightGBM, OLS (linear regression), and a neural network (NN).

Insight / Summary:

1. Summary

- LightGBM Model

- Data preprocessing

- Set LightGBM parameters

- Train model and make predictions

- XGBoost Model

- Data preprocessing (different from LightGBM)

- Remove outliers

- Train two different XGBoost models

- Combine predictions from both models

- Neural Network Model

- Data preprocessing (standardization, handling missing values)

- Network structure:

- 4 hidden layers (400 → 160 → 64 → 26 units)

- PReLU activation function

- Use Dropout and BatchNormalization

- Train model and make predictions

- OLS Model: OLS (Ordinary Least Squares) is the basic form of linear regression

- Feature engineering

- Train LinearRegression

- Make predictions for multiple dates

- Final Prediction Combination

- Combine predictions from each model using weights

- Apply FUDGE_FACTOR for final adjustment

- Save results to CSV file

2. FUDGE_FACTOR

pred = FUDGE_FACTOR * (OLS_WEIGHT*reg.predict(get_features(test)) + (1-OLS_WEIGHT)*pred0)

- This coefficient is applied after combining predictions from all models and increases the final prediction value by 12% (1.12 times).

- It is used for the following purposes:

- Prediction Bias Correction: To correct when models tend to systematically underpredict

- Calibration: To adjust predictions based on validation set or previous submission results

- Systematic Error Correction: To correct systematic errors due to data characteristics or model limitations

- This is an empirically determined value; the optimal value was likely found from validation-set or leaderboard performance.
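The blending formula can be tried out on plain arrays. In the sketch below, `FUDGE_FACTOR = 1.12` comes from the description above, while the `OLS_WEIGHT` value, the sample predictions, and the helper names `blend` and `best_fudge` are hypothetical. The second helper shows one way such a multiplier might be tuned on held-out data by minimizing MAE; this is an assumption about the procedure, not something the kernel documents.

```python
import numpy as np

FUDGE_FACTOR = 1.12   # from the text above: scales the blend by 12%
OLS_WEIGHT = 0.06     # hypothetical value, for illustration only

def blend(ols_pred, pred0, ols_weight=OLS_WEIGHT, fudge=FUDGE_FACTOR):
    """Weighted average of the OLS prediction and the combined prediction pred0, then rescaled."""
    ols_pred = np.asarray(ols_pred, dtype=float)
    pred0 = np.asarray(pred0, dtype=float)
    return fudge * (ols_weight * ols_pred + (1.0 - ols_weight) * pred0)

def best_fudge(y_true, y_pred, candidates):
    """Pick the multiplier from candidates that minimizes MAE on held-out data."""
    maes = [np.mean(np.abs(y_true - f * y_pred)) for f in candidates]
    return float(candidates[int(np.argmin(maes))])

# Made-up predictions for three parcels
ols_pred = np.array([0.010, 0.020, 0.015])
pred0 = np.array([0.012, 0.018, 0.011])

pred = blend(ols_pred, pred0)
unscaled = blend(ols_pred, pred0, fudge=1.0)
print(pred / unscaled)  # ratio of scaled to unscaled predictions

# Toy tuning run: targets built so the blend underpredicts by a constant factor
candidates = np.round(np.arange(0.90, 1.31, 0.01), 2)
y_true = 1.12 * unscaled
chosen = best_fudge(y_true, unscaled, candidates)
print(chosen)
```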
