Tree-based Ensemble Models?

- LightGBM, XGBoost, CatBoost, and Random Forest are all tree-based ensemble models.

- These algorithms are popular on Kaggle because of their high prediction performance, fast training, and ability to handle various data types.

- They show particularly powerful performance in analyzing structured data.

- "Tree-based": These algorithms all use decision trees as their basic building blocks.

- "Ensemble": They create more powerful prediction models by combining multiple trees.

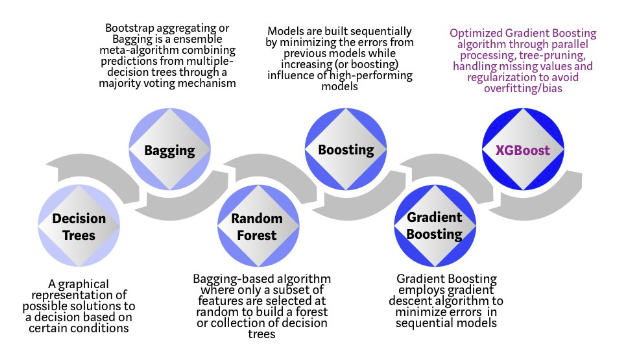

Types of Ensemble Models

- You can find details about ensemble models in my previous post, linked below.

- In summary, Bagging trains multiple decision trees independently on bootstrap samples of the data and aggregates their predictions (by voting or averaging) into the final result.

- Boosting trains models sequentially: each new model gives more weight to the examples that the previous models predicted poorly.

- As a result, Boosting often achieves higher accuracy than Bagging, but it trains more slowly and is more prone to overfitting.

- Boosting is suitable when the individual decision trees perform poorly and need improvement, while Bagging is more appropriate when overfitting is a concern.

- We should know the types of ensemble models to understand the tree-based ensemble models.
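To make the Bagging idea concrete, here is a minimal pure-Python sketch. It assumes a toy 1-D regression problem and uses depth-1 trees (stumps) as the base model; `fit_stump`, `bagging_fit`, and `bagging_predict` are illustrative names, not functions from any library.

```python
import random

def fit_stump(xs, ys):
    """Fit a depth-1 regression tree: one threshold and one mean per side."""
    best = None
    for t in sorted(set(xs))[:-1]:  # skip the largest x so both sides are non-empty
        left = [y for x, y in zip(xs, ys) if x <= t]
        right = [y for x, y in zip(xs, ys) if x > t]
        lm, rm = sum(left) / len(left), sum(right) / len(right)
        sse = sum((y - lm) ** 2 for y in left) + sum((y - rm) ** 2 for y in right)
        if best is None or sse < best[0]:
            best = (sse, t, lm, rm)
    if best is None:  # every x is identical: fall back to a constant prediction
        mean = sum(ys) / len(ys)
        return (xs[0], mean, mean)
    return best[1:]

def predict_stump(stump, x):
    t, lm, rm = stump
    return lm if x <= t else rm

def bagging_fit(xs, ys, n_trees=25, seed=0):
    """Train each stump independently on its own bootstrap sample."""
    rng = random.Random(seed)
    n = len(xs)
    stumps = []
    for _ in range(n_trees):
        idx = [rng.randrange(n) for _ in range(n)]  # sample rows with replacement
        stumps.append(fit_stump([xs[i] for i in idx], [ys[i] for i in idx]))
    return stumps

def bagging_predict(stumps, x):
    """Aggregate by averaging the individual predictions."""
    return sum(predict_stump(s, x) for s in stumps) / len(stumps)

xs = [1, 2, 3, 4, 5, 6, 7, 8]
ys = [1.0, 1.2, 0.9, 1.1, 3.0, 3.2, 2.9, 3.1]
stumps = bagging_fit(xs, ys)
print(bagging_predict(stumps, 2), bagging_predict(stumps, 7))
```

The trees never see each other's errors, which is the key contrast with Boosting, where each model depends on the ones before it.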

[Kaggle Extra Study] 6. Ensemble Method

dongsunseng.com

Random Forest

- Random Forest is built on the Decision Tree model.

- A Decision Tree classifies data by asking a sequence of binary (Yes/No) questions about feature values, splitting the data at each question.

- The problem with a single Decision Tree is that, when it is free to split on every feature, it often overfits the training data.

- Random Forest was developed to address this issue.

- Random Forest is a bagging ensemble of multiple decision trees.

- If there are 100 features in total, letting every tree use all 100 could lead to overfitting.

- Instead, it builds a forest of many Decision Trees, each using only a small random subset of the features (about 10 to 20 here), and aggregates their predictions.
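The sampling step can be sketched as follows. This is a simplified illustration, assuming each tree gets one bootstrap sample of rows and one random feature subset of size sqrt(number of features); `sample_tree_inputs` is a hypothetical helper. Note that library implementations such as scikit-learn usually redraw the feature subset at every split rather than once per tree.

```python
import math
import random

def sample_tree_inputs(n_rows, n_features, n_trees, seed=0):
    """For each tree, draw a bootstrap sample of rows and a random feature subset."""
    rng = random.Random(seed)
    k = max(1, int(math.sqrt(n_features)))  # common heuristic: sqrt(p) features
    plans = []
    for _ in range(n_trees):
        rows = [rng.randrange(n_rows) for _ in range(n_rows)]  # with replacement
        features = sorted(rng.sample(range(n_features), k))    # without replacement
        plans.append((rows, features))
    return plans

plans = sample_tree_inputs(n_rows=1000, n_features=100, n_trees=5)
for rows, features in plans:
    print(len(set(rows)), "distinct rows; features:", features)
```

With 100 features, each tree here sees only 10 of them, so no single strong feature can dominate every tree in the forest.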

Gradient Boosting Algorithms

GBM(Gradient Boosting Machine)

- Gradient Descent: a method to find optimal parameters that minimize the Loss function.

- Gradient Boosting can be thought of as this optimization process occurring at the model level rather than the parameter level.

- In other words, the gradient of the loss reveals where the currently trained model is weak, and each subsequent model is trained to correct those weaknesses, boosting overall performance.

- However, Gradient Boosting suffered from slow training and potential overfitting.

- XGBoost was created to address these problems.
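A minimal sketch of this "gradient descent at the model level" idea, assuming squared-error loss on a toy 1-D dataset. For that loss the negative gradient is simply the residual `y - prediction`, so each round fits a small tree to the residuals. The helper names and the learning rate of 0.3 are illustrative choices, not taken from any library.

```python
def fit_stump(xs, ys):
    """Fit a depth-1 regression tree: one threshold and one mean per side."""
    best = None
    for t in sorted(set(xs))[:-1]:
        left = [y for x, y in zip(xs, ys) if x <= t]
        right = [y for x, y in zip(xs, ys) if x > t]
        lm, rm = sum(left) / len(left), sum(right) / len(right)
        sse = sum((y - lm) ** 2 for y in left) + sum((y - rm) ** 2 for y in right)
        if best is None or sse < best[0]:
            best = (sse, t, lm, rm)
    if best is None:  # every x is identical: constant prediction
        mean = sum(ys) / len(ys)
        return (xs[0], mean, mean)
    return best[1:]

def predict_stump(stump, x):
    t, lm, rm = stump
    return lm if x <= t else rm

def gradient_boost(xs, ys, n_rounds=20, learning_rate=0.3):
    base = sum(ys) / len(ys)           # start from a constant model
    preds = [base] * len(xs)
    stumps, history = [], []
    for _ in range(n_rounds):
        # Negative gradient of 0.5 * (y - p)^2 with respect to p is the residual.
        residuals = [y - p for y, p in zip(ys, preds)]
        stump = fit_stump(xs, residuals)
        stumps.append(stump)
        preds = [p + learning_rate * predict_stump(stump, x)
                 for p, x in zip(preds, xs)]
        history.append(sum((y - p) ** 2 for y, p in zip(ys, preds)) / len(ys))
    return base, stumps, history

xs = [1, 2, 3, 4, 5, 6, 7, 8]
ys = [1.0, 1.5, 2.1, 2.4, 3.2, 3.4, 4.1, 4.4]
base, stumps, history = gradient_boost(xs, ys)
print("training MSE after round 1: ", history[0])
print("training MSE after round 20:", history[-1])
```

Because the rounds must run one after another, this loop cannot be parallelized across trees, which is one source of the slow training mentioned above.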

1. XGBoost

- XGBoost is also an algorithm that ensembles multiple decision trees.

- However, unlike Random Forest, XGBoost is implemented as a boosting ensemble model.

- The Boosting method is an ensemble technique that focuses on reducing the error of a single prediction model.

- For example, after a first decision tree is trained, a second tree is built to reduce the first tree's error, a third to reduce the remaining error, and so on.

- It is an optimized Gradient Boosting implementation that speeds up training through parallel processing.

- XGBoost is based on traditional Gradient Boosting, since it uses the gradient of the loss to decide what each new tree should fit, but it is faster than GBM (Gradient Boosting Machine) and adds overfitting controls such as regularization and early stopping.

- Additionally, being based on CART (Classification And Regression Trees), it can handle both classification and regression tasks.
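As a rough illustration of how regularization enters XGBoost's tree building, the sketch below computes the regularized leaf weight and split gain as given in the XGBoost documentation's introduction to boosted trees. `G` and `H` denote the sums of first and second derivatives of the loss over the instances in a node; the function names are mine, while `reg_lambda` and `gamma` mirror the names of the corresponding XGBoost parameters.

```python
def leaf_weight(g_sum, h_sum, reg_lambda=1.0):
    """Optimal leaf value: -G / (H + lambda). Larger lambda shrinks it toward 0."""
    return -g_sum / (h_sum + reg_lambda)

def split_gain(g_left, h_left, g_right, h_right, reg_lambda=1.0, gamma=0.0):
    """Loss reduction from a split, minus the per-leaf complexity penalty gamma."""
    def score(g, h):
        return g * g / (h + reg_lambda)
    parent = score(g_left + g_right, h_left + h_right)
    return 0.5 * (score(g_left, h_left) + score(g_right, h_right) - parent) - gamma

# Toy node under squared-error loss (gradient = prediction - y, hessian = 1):
# three instances on each side, with gradient sums of -6 and +6.
print(leaf_weight(-6, 3, reg_lambda=0.0))        # unregularized leaf value
print(leaf_weight(-6, 3, reg_lambda=1.0))        # shrunk by L2 regularization
print(split_gain(-6, 3, 6, 3, reg_lambda=1.0))   # split is worthwhile
print(split_gain(-6, 3, 6, 3, reg_lambda=1.0, gamma=10.0))  # negative: not worth splitting
```

A split with negative gain does not pay for the extra leaf, so a larger `gamma` or `reg_lambda` yields smaller, more conservative trees.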

2. LightGBM

- LightGBM, like XGBoost, is a model based on the GBM model.

- While XGBoost is faster than GBM thanks to parallel processing support, its training is still slow.

- Additionally, because it has many hyperparameters, tuning them with methods like grid search makes the process even more time-consuming.

- LightGBM was developed to improve training speed.


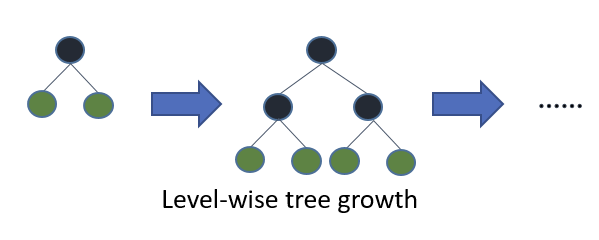

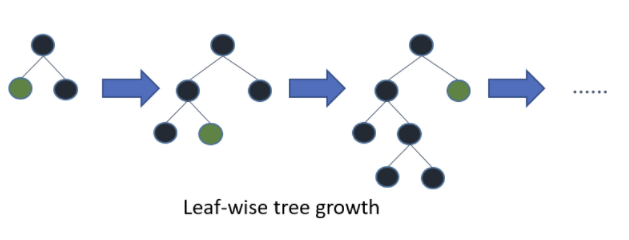

- The key difference in LightGBM is that it grows trees Leaf-wise rather than Level-wise during training.

- LightGBM also introduces a sampling technique called GOSS (Gradient-based One-Side Sampling), which is separate from Leaf-wise growth.

- GOSS keeps the instances with large gradients, randomly samples from the instances with small gradients, and, when calculating information gain, amplifies the sampled small-gradient instances by a constant multiplier.

- By doing this, it can focus on the under-trained (large-gradient) instances without significantly changing the data distribution.
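Here is a small sketch of GOSS-style sampling, following the description in the LightGBM paper: keep the top `a` fraction of instances by absolute gradient, sample a `b` fraction of the total from the rest, and weight the sampled ones by `(1 - a) / b`. The function name and the default rates are illustrative assumptions.

```python
import random

def goss_sample(gradients, top_rate=0.2, other_rate=0.1, seed=0):
    """Return {index: weight} for the instances kept by a GOSS-style sample."""
    rng = random.Random(seed)
    n = len(gradients)
    # Rank instances from largest to smallest absolute gradient.
    order = sorted(range(n), key=lambda i: abs(gradients[i]), reverse=True)
    n_top = int(top_rate * n)
    n_other = int(other_rate * n)
    top, rest = order[:n_top], order[n_top:]
    sampled = rng.sample(rest, n_other)       # random subset of small gradients
    amplify = (1 - top_rate) / other_rate     # compensates for the dropped instances
    weights = {i: 1.0 for i in top}
    weights.update({i: amplify for i in sampled})
    return weights

gradients = [(-1) ** i * i / 20 for i in range(20)]  # |gradient| grows with i
weights = goss_sample(gradients)
print(weights)
print("total weight:", sum(weights.values()))
```

Only 6 of the 20 instances are kept, yet their total weight still adds up to about 20, which is how the sample stays close to the original data distribution.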

- Leaf-wise growth works as follows: while a conventional Level-wise tree creates branches one layer at a time before moving to the next level, LightGBM always splits the leaf that offers the largest loss reduction, without layer restrictions.

- Level-wise growth keeps the tree balanced, which limits its depth but requires additional computation.

- Because LightGBM keeps splitting leaf nodes in ways that maximize loss reduction regardless of balance, it creates asymmetrical, deep trees and can achieve greater loss reduction than Level-wise growth for the same number of leaves.

- Compared with other boosting algorithms, this often results in lower memory usage, the ability to handle large volumes of data, faster model creation, and higher performance.

- Additionally, with parallel processing support, it achieves high performance while training very quickly.


- However, the disadvantage of this Leaf-wise approach is that it's vulnerable to overfitting.

- Therefore, when dealing with small datasets (about 10,000 rows) where overfitting might occur, XGBoost is recommended over LightGBM.
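The difference between Level-wise and Leaf-wise growth can be sketched with a toy model. Everything here is hypothetical: `toy_gain` invents a loss reduction for each possible split (a leaf is named by its path of `L`/`R` steps from the root), chosen so that the left branch is always the more promising one. Real gains come from the data, and Leaf-wise growth is not guaranteed to win in every case.

```python
import heapq

def toy_gain(path):
    """Hypothetical loss reduction from splitting the leaf reached by `path`."""
    gain = 1000
    for step in path:
        gain = gain * 8 // 10 if step == "L" else gain * 2 // 10
    return gain

def grow_level_wise(depth):
    """Split every leaf of the current level before moving to the next level."""
    splits, frontier = [], [""]
    for _ in range(depth):
        next_frontier = []
        for leaf in frontier:
            splits.append(leaf)
            next_frontier += [leaf + "L", leaf + "R"]
        frontier = next_frontier
    return splits, frontier

def grow_leaf_wise(num_leaves):
    """Always split the current leaf with the largest gain (best-first)."""
    heap = [(-toy_gain(""), "")]  # max-heap of current leaves via negated gain
    splits = []
    while len(heap) < num_leaves:
        _, leaf = heapq.heappop(heap)
        splits.append(leaf)
        for child in (leaf + "L", leaf + "R"):
            heapq.heappush(heap, (-toy_gain(child), child))
    return splits, sorted(path for _, path in heap)

level_splits, level_leaves = grow_level_wise(depth=2)      # 4 leaves
leaf_splits, leaf_leaves = grow_leaf_wise(num_leaves=4)    # also 4 leaves
level_total = sum(toy_gain(p) for p in level_splits)
leaf_total = sum(toy_gain(p) for p in leaf_splits)
print("level-wise leaves:", level_leaves, "total gain:", level_total)
print("leaf-wise leaves: ", leaf_leaves, "total gain:", leaf_total)
```

With the same four leaves, the Leaf-wise tree goes one level deeper along the promising branch and collects more total gain in this toy setup. That extra depth is also why it overfits more easily on small datasets.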

3. CatBoost (Categorical Boosting)

- CatBoost is a model created to reduce the overfitting seen in earlier GBM-based models such as LightGBM, and it is optimized for handling categorical variables.

- "It was developed to address the target leakage problem and categorical variable processing issues present in existing GBM-based algorithms through Order principle and new categorical variable processing methods."

- More on these problems:

- Categorical variables have discrete values that cannot be compared with each other, which is why one-hot encoding was traditionally used as a solution.

- One-hot encoding can dramatically increase the number of variables.

- To solve this problem, one can either group categories into clusters before one-hot encoding or use Target Statistics (TS) estimation methods.

- LightGBM, by contrast, converts categorical variables to gradient statistics in every boosting round, which costs significant computation time and memory.

- Greedy TS estimates each category's value as the mean y-value of that category, but this can lead to target leakage and risks overfitting.

- That is, it encodes a categorical independent variable using the mean of the dependent variable.

- This makes the encoded feature more strongly correlated with the target than conventional 0/1 encoding.

- Hold-out TS improves on this by splitting the training data into two parts: one for TS estimation and another for training, thus reducing overfitting. However, this method has the disadvantage of reducing the amount of training data.

- Therefore, CatBoost proposes Ordered TS, which applies the ordering principle.

- Ordered TS computes each example's TS estimate only from the examples observed before it.

- It introduces a random permutation (an artificial time order) and estimates TS for each example using only the data that precedes it in that order.

- Since using a single random permutation for TS estimation would result in higher variance in earlier TS estimates compared to later ones, different random permutations are used at each step.

- This is also called "Ordered Target Encoding" or "Ordered Mean Encoding".
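The contrast between Greedy TS and Ordered TS can be sketched in a few lines. This is a simplified illustration, not CatBoost's implementation: it uses the smoothed mean `(sum of targets + a * prior) / (count + a)` from the CatBoost paper, and the function names and the tiny dataset are made up for the example.

```python
def greedy_ts(categories, targets, prior, a=1.0):
    """Smoothed category mean computed from ALL rows, including the row itself."""
    encoded = []
    for c in categories:
        ys = [y for cat, y in zip(categories, targets) if cat == c]
        encoded.append((sum(ys) + a * prior) / (len(ys) + a))
    return encoded

def ordered_ts(categories, targets, prior, a=1.0, permutation=None):
    """Smoothed category mean computed only from rows earlier in the permutation."""
    order = list(permutation) if permutation is not None else list(range(len(categories)))
    sums, counts = {}, {}
    encoded = [None] * len(categories)
    for i in order:
        c = categories[i]
        # Encode row i BEFORE adding its own target to the running statistics.
        encoded[i] = (sums.get(c, 0.0) + a * prior) / (counts.get(c, 0) + a)
        sums[c] = sums.get(c, 0.0) + targets[i]
        counts[c] = counts.get(c, 0) + 1
    return encoded

categories = ["a", "b", "a", "a", "b"]
targets = [1, 0, 1, 0, 1]
prior = sum(targets) / len(targets)  # overall target mean

greedy = greedy_ts(categories, targets, prior)
ordered = ordered_ts(categories, targets, prior)
print("greedy: ", greedy)
print("ordered:", ordered)
```

In the greedy version every row's encoding already contains its own label, which is the leakage. In the ordered version the first row of each category falls back to the prior, and later rows use progressively more history.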


- Additionally, CatBoost proposes the ordering principle as a solution for overfitting caused by Target leakage and Prediction Shift.

- This is called the "Ordered Boosting" method.

- Overfitting occurs because the same data is used both to calculate residuals and to train the model in each boosting round.

- To solve this, random permutations are used, similar to Ordered TS.

- To calculate the residual for the i-th sample, it uses a model trained only on the first (i-1) samples of the permutation.

- In other words, rather than updating residuals using a model trained repeatedly on the same data, it updates them using models trained on different data.

- Ordered Boosting thus builds models from residuals computed on partial data, gradually increasing the amount of data each model has seen.
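A heavily simplified sketch of the ordering principle for residuals follows. It assumes a single boosting round and lets a constant model (the mean of the targets seen so far) stand in for a tree; the function names and the `prior` used for the first sample are illustrative assumptions, not CatBoost's actual procedure.

```python
def plain_residuals(targets):
    """Residuals from one model fitted on all rows: each row has seen its own target."""
    mean = sum(targets) / len(targets)
    return [y - mean for y in targets]

def ordered_residuals(targets, permutation, prior=0.0):
    """Residual for each row uses a model fitted only on rows earlier in the permutation."""
    residuals = [None] * len(targets)
    running_sum, count = 0.0, 0
    for i in permutation:
        # "Model trained on the first i-1 samples": here, simply their mean.
        prediction = running_sum / count if count else prior
        residuals[i] = targets[i] - prediction
        running_sum += targets[i]
        count += 1
    return residuals

targets = [2.0, 4.0, 6.0, 8.0]
plain = plain_residuals(targets)
forward = ordered_residuals(targets, permutation=[0, 1, 2, 3])
backward = ordered_residuals(targets, permutation=[3, 2, 1, 0])
print("plain:   ", plain)
print("forward: ", forward)
print("backward:", backward)
```

The residuals depend on the permutation, which is why several random permutations are used in practice.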

- Other methodologies like oblivious decision trees and feature combination are also used.

- Oblivious Decision Trees

- They apply the same splitting criterion to every node at a given level of the tree.

- This produces balanced trees and helps prevent overfitting.

- Categorical Feature Combinations

- As the name suggests, this means creating new features from combinations of categorical variables.

- Automatically combines related categorical variables to reduce the number of variables.

- It builds these combinations greedily: when splitting a tree, it combines the categorical features already used in previous splits with the remaining categorical features and converts the combinations to TS.

- Because every level of an oblivious tree shares a single split, CatBoost builds its trees Level-wise.
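To show what "the same split across an entire level" means in practice, here is a small sketch of prediction with an oblivious tree. Since each level asks one question of every row, the answers form a bit string that directly indexes the leaf. The feature names, thresholds, and leaf values are invented for the example.

```python
def oblivious_leaf_index(row, level_splits):
    """Each level contributes one bit: 1 if the row's value exceeds that level's threshold."""
    index = 0
    for feature, threshold in level_splits:
        index = (index << 1) | (1 if row[feature] > threshold else 0)
    return index

def oblivious_predict(row, level_splits, leaf_values):
    return leaf_values[oblivious_leaf_index(row, level_splits)]

# A depth-2 oblivious tree: one (feature, threshold) pair per level, 2**2 leaves.
level_splits = [("age", 30), ("income", 50_000)]
leaf_values = [0.1, 0.4, 0.6, 0.9]

older_low_income = {"age": 42, "income": 38_000}
younger_high_income = {"age": 25, "income": 60_000}
print(oblivious_predict(older_low_income, level_splits, leaf_values))
print(oblivious_predict(younger_high_income, level_splits, leaf_values))
```

A tree of depth d always has exactly 2**d leaves, so it is balanced by construction, and prediction needs only d comparisons with no branching on tree structure.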

- While CatBoost improves accuracy and speed through its encoding methods for categorical variables, it becomes significantly slower when the data contains many continuous variables.

- In addition, when the data is mostly numerical, CatBoost's techniques may not be very effective.

- Also, its training speed is slower than LightGBM's.

- However, it's rare to see large dataframes without categorical features.

- While handling categorical features has always been a major issue in feature engineering, CatBoost has a significant advantage in this area.

- Therefore, practitioners using CatBoost often put considerable effort into converting variables into categorical form.

Parameters

Reference

XGBoost Algorithm: Long May She Reign! (towardsdatascience.com)

https://www.kdnuggets.com/2018/03/catboost-vs-light-gbm-vs-xgboost.html

XGBoost, LightGBM, CatBoost: Summary and Comparison (statinknu.tistory.com)

