Thirteenth competition following Youhan Lee's curriculum. Natural Language Processing competition.

First Kernel: [For Beginners] Tackling Toxic Using Keras

- Kernel using keras LSTM.

Insight / Summary:

1. Checking null values

train.isnull().any(),test.isnull().any()

2. Tokenization

list_tokenized_train = tokenizer.texts_to_sequences(list_sentences_train)

list_tokenized_test = tokenizer.texts_to_sequences(list_sentences_test)

Keras has turned our words into an index representation for us:

[[688, 75, 1, 126, 130, 177, 29, 672, 4511, 12052, 1116, ...]]
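To make the index representation above more concrete, here is a minimal pure-Python sketch of the idea. It is not the Keras implementation: `build_word_index` and `texts_to_sequences` are hypothetical helpers, the whitespace tokenization is a simplification, and the only behaviour borrowed from Keras is that more frequent words get smaller indices starting at 1.

```python
from collections import Counter

def build_word_index(texts):
    """Map each word to an integer; the most frequent word gets index 1."""
    counts = Counter()
    for text in texts:
        counts.update(text.lower().split())
    # sorted() is stable, so words with equal counts keep first-seen order
    ranked = sorted(counts.items(), key=lambda kv: -kv[1])
    return {word: i + 1 for i, (word, _) in enumerate(ranked)}

def texts_to_sequences(texts, word_index):
    """Replace every known word by its index; unknown words are dropped."""
    return [[word_index[w] for w in text.lower().split() if w in word_index]
            for text in texts]

train_texts = ["you are great", "you are not", "you"]
word_index = build_word_index(train_texts)
train_seqs = texts_to_sequences(train_texts, word_index)
unseen_seqs = texts_to_sequences(["you rock"], word_index)
print(word_index)
print(train_seqs)
```

Index 0 is never assigned to a word, which is what leaves it free to be used as the padding value in the next step.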

3. We have to feed the model a stream of data with a consistent length (a fixed number of features) -> Padding

- We can make the shorter sentences as long as the others by filling the shortfall with zeros.

- On the other hand, we also have to trim the longer ones to the same length (maxlen) as the short ones.

- In this case, we have set the max length to be 200.
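The padding and trimming described above can be sketched in a few lines of plain Python. `pad_sequence` is a hypothetical helper, not the Keras function; it only mimics the default behaviour of `pad_sequences`, which pads and truncates at the front of the sequence.

```python
def pad_sequence(seq, maxlen, value=0):
    """Return seq with exactly maxlen items (front-padded or front-truncated)."""
    if len(seq) >= maxlen:
        # Too long: keep only the last maxlen tokens
        return seq[len(seq) - maxlen:]
    # Too short: fill the shortfall with zeros at the front
    return [value] * (maxlen - len(seq)) + seq

short_padded = pad_sequence([688, 75, 1], maxlen=5)
long_trimmed = pad_sequence([688, 75, 1, 126, 130, 177], maxlen=4)
print(short_padded)
print(long_trimmed)
```

Note that with front truncation it is the beginning of a long comment that gets dropped, not the end.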

maxlen = 200

X_t = pad_sequences(list_tokenized_train, maxlen=maxlen)

X_te = pad_sequences(list_tokenized_test, maxlen=maxlen)

- How do you know what is the best "maxlen" to set?

- If you put it too short, you might lose some useful feature that could cost you some accuracy points down the path.

- If you put it too long, your LSTM cell will have to be larger to store the possible values or states.

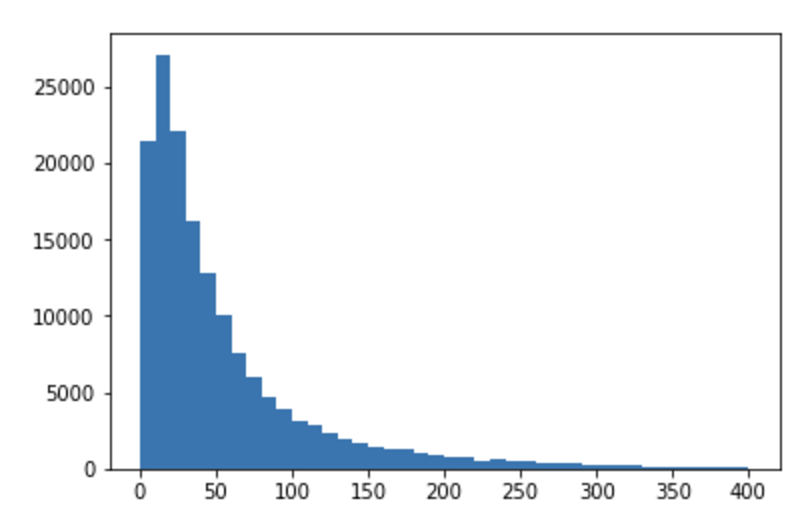

- One of the ways to go about it is to see the distribution of the number of words in sentences.

totalNumWords = [len(one_comment) for one_comment in list_tokenized_train]

plt.hist(totalNumWords, bins=np.arange(0, 410, 10))

plt.show()

- As we can see, most sentences are around 30 words long or a little more.

- We could set "maxlen" to about 50, but I'm being paranoid, so I have set it to 200.

- Then again, this is something you could experiment with to find the magic number.
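Besides eyeballing the histogram, one way to experiment is to compute how many comments a given "maxlen" would keep whole. The sketch below is only an illustration: `coverage` and `smallest_maxlen` are hypothetical helpers and the lengths are made-up numbers, not the competition data.

```python
import math

def coverage(lengths, maxlen):
    """Fraction of comments that fit into maxlen tokens without trimming."""
    return sum(1 for n in lengths if n <= maxlen) / len(lengths)

def smallest_maxlen(lengths, target):
    """Smallest length that keeps at least `target` of the comments whole."""
    ordered = sorted(lengths)
    k = math.ceil(target * len(ordered)) - 1
    return ordered[max(k, 0)]

# Made-up token counts, standing in for totalNumWords
lengths = [5, 12, 30, 31, 33, 40, 48, 60, 150, 400]
cov_50 = coverage(lengths, 50)
cov_200 = coverage(lengths, 200)
len_90 = smallest_maxlen(lengths, 0.9)
print(cov_50, cov_200, len_90)
```

On the real data you would pass `totalNumWords` instead of the toy list and compare a few candidate values against validation scores.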

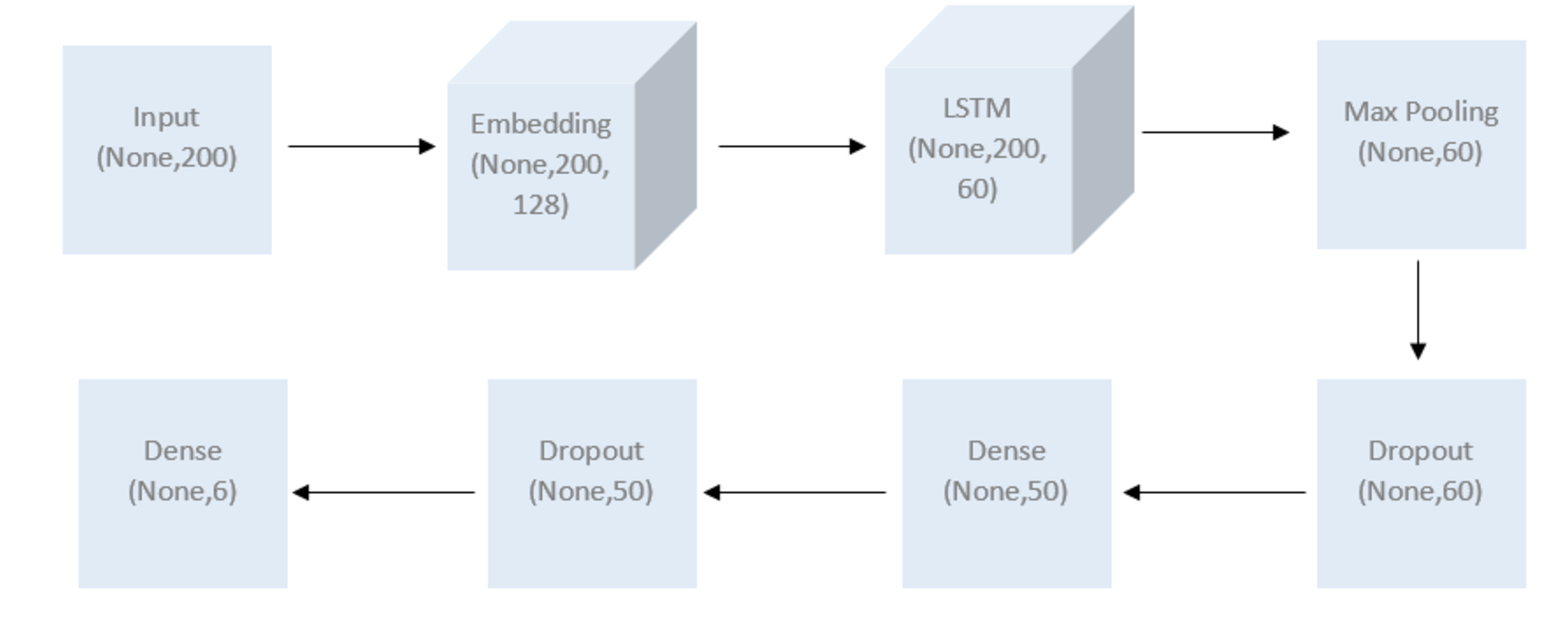

4. LSTM Modeling Details

- Before we can pass the LSTM output to a normal (dense) layer, we need to reshape the 3D tensor into a 2D one.

- We reshape carefully to avoid throwing away data that is important to us, and ideally we want the resulting data to be a good representative of the original data.

- Therefore, we use a Global Max Pooling layer, which is traditionally used in CNN problems to reduce the dimensionality of image data. In simple terms, we go through each patch of data and take the maximum value of each patch. Here that means taking, for every feature, its maximum over all time steps, so an output of shape (1, 200, 60) becomes (1, 60).

- This collection of maximum values is a new, down-sized set of data we can use.
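To see what the pooling step does to the shapes, here is a small pure-Python sketch. `global_max_pool_1d` is a hypothetical helper working on nested lists, not the Keras layer; it only mirrors the idea of taking the maximum of every feature across the time axis.

```python
def global_max_pool_1d(sequence):
    """sequence: list of time steps, each a list of feature values.
    Returns one value per feature: its maximum over all time steps."""
    return [max(column) for column in zip(*sequence)]

# One comment with 3 time steps and 3 features per step
sequence = [
    [0.1, 0.9, -0.3],
    [0.7, 0.2, 0.0],
    [0.4, 0.5, -0.1],
]
pooled = global_max_pool_1d(sequence)
print(pooled)

# A batch shaped like the notebook's LSTM output: (1, 200, 60) -> (1, 60)
batch = [[[float((t * 7 + f * 3) % 11) for f in range(60)] for t in range(200)]]
pooled_batch = [global_max_pool_1d(seq) for seq in batch]
print(len(pooled_batch), len(pooled_batch[0]))
```

The time axis disappears entirely, which is why the result can be fed straight into a dense layer.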

5. Additional tips and tricks

1) If you have hit some roadblocks, especially when it starts returning dimension related errors, a good idea is to run "model.summary()" because it lists out all your layer outputs, which is pretty useful for diagnosis.

model.summary()

2) While adding more layers, and doing more fancy transformations, it's a good idea to check if the outputs are performing as you have expected. You can reveal the output of a particular layer by:

from keras import backend as K

# with a Sequential model

get_3rd_layer_output = K.function([model.layers[0].input],
                                  [model.layers[2].output])

layer_output = get_3rd_layer_output([X_t[:1]])[0]

layer_output.shape

# print layer_output to see the actual data

# result: (1, 200, 60)

Second Kernel: Stop the S@#$ - Toxic Comments EDA

- EDA Kernel.

Insight / Summary:

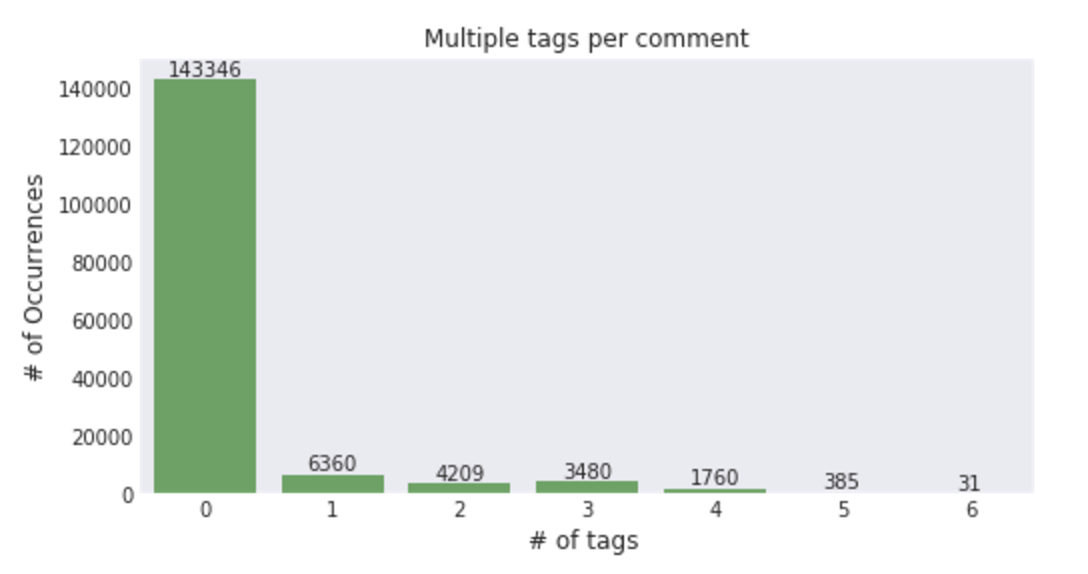

1. Multi-tagging

- There are ~95k comments in the training dataset, with ~21k tags and ~86k clean comments.

- Only about 9k comments carry any tag at all, so ~21k tags is only possible when multiple tags are associated with a single comment (e.g., a comment can be classified as both toxic and obscene).
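As a rough illustration of the counting argument above, the sketch below builds per-comment tag counts from a tiny made-up label table. The rows are invented for the example (columns in the order toxic, severe_toxic, obscene, threat, insult, identity_hate); `rowsums` here plays the same role as in the kernel code that follows.

```python
from collections import Counter

# Made-up label rows, one tuple of six 0/1 flags per comment
labels = [
    (0, 0, 0, 0, 0, 0),
    (1, 0, 1, 0, 1, 0),
    (1, 0, 0, 0, 0, 0),
    (0, 0, 0, 0, 0, 0),
    (1, 1, 1, 0, 1, 0),
]

rowsums = [sum(row) for row in labels]        # number of tags per comment
tags_per_comment = Counter(rowsums)           # distribution that gets plotted
total_tags = sum(rowsums)
clean_comments = tags_per_comment[0]
tagged_comments = len(labels) - clean_comments

print(tags_per_comment)
print(total_tags, tagged_comments)
```

Whenever `total_tags` is larger than `tagged_comments`, some comments must carry more than one tag.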

x=rowsums.value_counts()

#plot

plt.figure(figsize=(8,4))

ax = sns.barplot(x=x.index, y=x.values, alpha=0.8, color=color[2])

plt.title("Multiple tags per comment")

plt.ylabel('# of Occurrences', fontsize=12)

plt.xlabel('# of tags ', fontsize=12)

#adding the text labels

rects = ax.patches

labels = x.values

for rect, label in zip(rects, labels):
    height = rect.get_height()
    ax.text(rect.get_x() + rect.get_width()/2, height + 5, label, ha='center', va='bottom')

plt.show()

2. Feature Engineering

1) Direct features: Features that are directly due to words/content. We will explore the following techniques:

- Word frequency features

- Count features

- Bigrams

- Trigrams

- Vector distance mapping of words (Eg: Word2Vec)

- Sentiment scores

2) Indirect features: Some more experimental features.

- count of sentences

- count of words

- count of unique words

- count of letters

- count of punctuations

- count of uppercase words/letters

- count of stop words

- Avg length of each word

3) Leaky features:

From the example, we know that the comments contain identifier information (e.g., IP, username, etc.). We can create features out of them, but it will certainly lead to overfitting to this specific Wikipedia use case.

- toxic IP scores

- toxic users

Note: We create the indirect and leaky features first. There are two reasons for this:

- Count features(Direct features) are useful only if they are created from a clean corpus

- Also the indirect features help compensate for the loss of information when cleaning the dataset

3. Indirect features

## Indirect features

#Sentence count in each comment:

# '\n' can be used to count the number of sentences in each comment

df['count_sent']=df["comment_text"].apply(lambda x: len(re.findall("\n",str(x)))+1)

#Word count in each comment:

df['count_word']=df["comment_text"].apply(lambda x: len(str(x).split()))

#Unique word count

df['count_unique_word']=df["comment_text"].apply(lambda x: len(set(str(x).split())))

#Letter count

df['count_letters']=df["comment_text"].apply(lambda x: len(str(x)))

#punctuation count

df["count_punctuations"] =df["comment_text"].apply(lambda x: len([c for c in str(x) if c in string.punctuation]))

#upper case words count

df["count_words_upper"] = df["comment_text"].apply(lambda x: len([w for w in str(x).split() if w.isupper()]))

#title case words count

df["count_words_title"] = df["comment_text"].apply(lambda x: len([w for w in str(x).split() if w.istitle()]))

#Number of stopwords

df["count_stopwords"] = df["comment_text"].apply(lambda x: len([w for w in str(x).lower().split() if w in eng_stopwords]))

#Average length of the words

df["mean_word_len"] = df["comment_text"].apply(lambda x: np.mean([len(w) for w in str(x).split()]))

#derived features

#Unique word percent in each comment:

df['word_unique_percent']=df['count_unique_word']*100/df['count_word']

#derived features

#Punct percent in each comment:

df['punct_percent']=df['count_punctuations']*100/df['count_word']
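To check what these count features actually produce, it helps to run them on a single comment. The sketch below recomputes a few of them without pandas; `indirect_features` is a hypothetical helper and `STOPWORDS` is a tiny stand-in for `eng_stopwords`, which the kernel builds elsewhere.

```python
import string

# Tiny illustrative stop word set (an assumption, not the real list)
STOPWORDS = {"the", "a", "is", "and", "you", "are"}

def indirect_features(comment, stopwords=STOPWORDS):
    """Compute a few of the indirect count features for one comment."""
    words = comment.split()
    feats = {
        "count_sent": comment.count("\n") + 1,
        "count_word": len(words),
        "count_unique_word": len(set(words)),
        "count_letters": len(comment),
        "count_punctuations": sum(c in string.punctuation for c in comment),
        "count_words_upper": sum(w.isupper() for w in words),
        "count_stopwords": sum(w in stopwords for w in comment.lower().split()),
    }
    # Derived feature; guard against empty comments
    feats["word_unique_percent"] = (
        feats["count_unique_word"] * 100 / feats["count_word"] if words else 0.0
    )
    return feats

feats = indirect_features("YOU are the WORST!\nYou are.")
print(feats)
```

Two things stand out on this example: "count_letters" is really a character count (it includes spaces and the newline), and words are split on whitespace only, so "are" and "are." count as different words.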

4. Leaky features

- Caution: Even though including these features might help us perform better in this particular scenario, it will not make sense to add them to the final/general-purpose model.

- Here we are creating our own custom count vectorizer to create count variables that match our regex condition.

#Leaky features

df['ip']=df["comment_text"].apply(lambda x: re.findall(r"\d{1,3}\.\d{1,3}\.\d{1,3}\.\d{1,3}",str(x)))

#count of ip addresses

df['count_ip']=df["ip"].apply(lambda x: len(x))

#links

df['link']=df["comment_text"].apply(lambda x: re.findall("http://.*com",str(x)))

#count of links

df['count_links']=df["link"].apply(lambda x: len(x))

#article ids

df['article_id']=df["comment_text"].apply(lambda x: re.findall(r"\d:\d\d\s{0,5}$",str(x)))

df['article_id_flag']=df.article_id.apply(lambda x: len(x))

#username

## regex for Match anything with [[User: ---------- ]]

# regexp = re.compile("\[\[User:(.*)\|")

df['username']=df["comment_text"].apply(lambda x: re.findall(r"\[\[User(.*)\|",str(x)))

#count of username mentions

df['count_usernames']=df["username"].apply(lambda x: len(x))

#check if features are created

#df.username[df.count_usernames>0]

# Leaky Ip

cv = CountVectorizer()

count_feats_ip = cv.fit_transform(df["ip"].apply(lambda x : str(x)))

# Leaky usernames

cv = CountVectorizer()

count_feats_user = cv.fit_transform(df["username"].apply(lambda x : str(x)))
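A quick way to sanity-check the regexes above is to run them on one sample string. The comment text below is invented for the example; the two patterns are the same ones used for the `ip` and `username` columns, written as raw strings.

```python
import re

IP_PATTERN = re.compile(r"\d{1,3}\.\d{1,3}\.\d{1,3}\.\d{1,3}")
USER_PATTERN = re.compile(r"\[\[User(.*)\|")

# Made-up comment in Wikipedia talk-page style
comment = "Reverted edits by [[User:Example|Example]] from 192.168.0.1 and 10.0.0.255."

ips = IP_PATTERN.findall(comment)
users = USER_PATTERN.findall(comment)
print(ips)
print(users)

# The IP pattern does not validate octets, so this also matches
loose_match = IP_PATTERN.findall("version 999.1.2.3")
print(loose_match)
```

Note that the username capture keeps the leading colon, and because `.*` is greedy, a comment with several `|` characters would be captured up to the last one.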

5. Direct Features

1) Count based features(for unigrams):

- Let's create some features based on the frequency distribution of the words.

- Initially, let's consider taking words one at a time (i.e., unigrams).

- Python's scikit-learn provides 3 ways of creating count features.

- All three of them first create a vocabulary (dictionary) of words and then create a sparse matrix of word counts for the words in the sentence that are present in the dictionary.

- A brief description of them:

  - CountVectorizer

    - Creates a matrix with frequency counts of each word in the text corpus

  - TF-IDF Vectorizer

    - TF - Term Frequency -- Count of the words (terms) in the text corpus (same as CountVectorizer)

    - IDF - Inverse Document Frequency -- Penalizes words that are too frequent. We can think of this as regularization

  - HashingVectorizer

    - Creates a hashmap (word-to-number mapping based on a hashing technique) instead of a dictionary for the vocabulary

    - This enables it to be more scalable and faster for a larger text corpus

    - Can be parallelized across multiple threads

- Using TF-IDF here.

- Note: Using the concatenated dataframe "merge", which contains text from both the train and test datasets, to ensure that the vocabulary we create does not miss out on words that are unique to the test set.
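To make the TF and IDF parts less abstract, here is a small pure-Python sketch of the weighting. It assumes the formulas scikit-learn documents for `smooth_idf=True` and `sublinear_tf=True` with L2 normalization, i.e. idf = ln((1 + n) / (1 + df)) + 1 and tf = 1 + ln(count). The `tfidf` helper and the whitespace tokenization are simplifications for illustration, not the library code.

```python
import math
from collections import Counter

def tfidf(docs):
    """Return (rows, idf): one {term: weight} dict per document, L2-normalized."""
    tokenized = [doc.lower().split() for doc in docs]
    n_docs = len(tokenized)
    # Document frequency: in how many documents does each term appear?
    df = Counter()
    for tokens in tokenized:
        df.update(set(tokens))
    # Smoothed IDF: frequent terms get a smaller weight
    idf = {t: math.log((1 + n_docs) / (1 + c)) + 1 for t, c in df.items()}
    rows = []
    for tokens in tokenized:
        counts = Counter(tokens)
        # Sublinear TF: 1 + log(count) instead of the raw count
        weights = {t: (1 + math.log(c)) * idf[t] for t, c in counts.items()}
        norm = math.sqrt(sum(w * w for w in weights.values()))
        rows.append({t: w / norm for t, w in weights.items()} if norm else {})
    return rows, idf

docs = ["you are nice", "you are rude rude", "thanks"]
rows, idf = tfidf(docs)
print(idf)
print(rows[1])
```

In the second document "rude" ends up with a larger weight than "you": it is repeated, and it appears in fewer documents.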

### Unigrams -- TF-IDF

# using settings recommended here for TF-IDF -- https://www.kaggle.com/abhishek/approaching-almost-any-nlp-problem-on-kaggle

#some detailed description of the parameters

# min_df=10 --- ignore terms that appear in fewer than 10 documents

# max_features=None --- Create as many words as present in the text corpus

# changing max_features to 10k for memory issues

# analyzer='word' --- Create features from words (alternatively char can also be used)

# ngram_range=(1,1) --- Use only one word at a time (unigrams)

# strip_accents='unicode' -- removes accents

# use_idf=1,smooth_idf=1 --- enable IDF

# sublinear_tf=1 --- Apply sublinear tf scaling, i.e. replace tf with 1 + log(tf)

#temp settings to min=200 to facilitate top features section to run in kernels

#change back to min=10 to get better results

start_unigrams=time.time()

tfv = TfidfVectorizer(min_df=200, max_features=10000,
                      strip_accents='unicode', analyzer='word', ngram_range=(1,1),
                      use_idf=1, smooth_idf=1, sublinear_tf=1,
                      stop_words='english')

tfv.fit(clean_corpus)

# get_feature_names() was removed in scikit-learn 1.2; older versions need get_feature_names()
features = np.array(tfv.get_feature_names_out())

train_unigrams = tfv.transform(clean_corpus.iloc[:train.shape[0]])

test_unigrams = tfv.transform(clean_corpus.iloc[train.shape[0]:])

#https://buhrmann.github.io/tfidf-analysis.html

def top_tfidf_feats(row, features, top_n=25):
    ''' Get top n tfidf values in row and return them with their corresponding feature names.'''
    topn_ids = np.argsort(row)[::-1][:top_n]
    top_feats = [(features[i], row[i]) for i in topn_ids]
    df = pd.DataFrame(top_feats)
    df.columns = ['feature', 'tfidf']
    return df

def top_feats_in_doc(Xtr, features, row_id, top_n=25):
    ''' Top tfidf features in specific document (matrix row) '''
    row = np.squeeze(Xtr[row_id].toarray())
    return top_tfidf_feats(row, features, top_n)

def top_mean_feats(Xtr, features, grp_ids, min_tfidf=0.1, top_n=25):
    ''' Return the top n features that on average are most important amongst documents in rows
        identified by indices in grp_ids. '''
    D = Xtr[grp_ids].toarray()
    D[D < min_tfidf] = 0
    tfidf_means = np.mean(D, axis=0)
    return top_tfidf_feats(tfidf_means, features, top_n)

# modified for multilabel multiclass
def top_feats_by_class(Xtr, features, min_tfidf=0.1, top_n=20):
    ''' Return a list of dfs, where each df holds top_n features and their mean tfidf value
        calculated across documents with the same class label. '''
    dfs = []
    cols = train_tags.columns
    for col in cols:
        ids = train_tags.index[train_tags[col]==1]
        feats_df = top_mean_feats(Xtr, features, ids, min_tfidf=min_tfidf, top_n=top_n)
        feats_df.label = col
        dfs.append(feats_df)
    return dfs

#get top n for unigrams

tfidf_top_n_per_lass=top_feats_by_class(train_unigrams,features)

end_unigrams=time.time()

print("total time in unigrams",end_unigrams-start_unigrams)

print("total time till unigrams",end_unigrams-start_time)

# result: total time in unigrams 85.26099634170532

# total time till unigrams 366.4286904335022

Third Kernel: Logistic regression with words and char n-grams

- As the title says, this kernel uses logistic regression with both word-level and character-level features.

Insight / Summary:

1. Summary

- This code implements a machine learning model for classifying toxic comments.

- Here are its key features and operational methods:

- Data Processing Approach:

- Analyzes comment text at two levels (word and character)

- Word-level analysis captures individual word meanings

- Character-level analysis can capture typos and special expressions

- Feature Extraction Method:

- Uses TF-IDF (Term Frequency-Inverse Document Frequency) vectorization

- Word features: Extracts up to 10,000 unigram features

- Character features: Extracts up to 50,000 features from 2-6 character sequences

- Combines both features to create rich text representation

- Modeling Approach:

- Creates separate binary classification models for each of 6 toxic categories

- Uses logistic regression to predict probabilities for each category

- Evaluates model performance using 3-fold cross-validation

- Measures performance using ROC-AUC score

- Optimization Considerations:

- Sets sublinear_tf=True to reduce impact of extreme frequency values

- Sets stop_words='english' to remove stop words

- Prevents overfitting through L2 regularization (C=0.1)

- Optimizes large-scale data processing using SAG (Stochastic Average Gradient) optimizer

- This implementation demonstrates a practical approach to text classification, particularly effective for analyzing toxicity in comments from multiple angles.
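To illustrate why the character-level view helps with typos, the sketch below compares two spellings of the same insult. `char_ngrams` and `jaccard` are hypothetical helpers written for this example; the 2-6 character range matches the setting summarized above, but this is not how TfidfVectorizer is implemented internally.

```python
def char_ngrams(text, n_min=2, n_max=6):
    """Set of all character n-grams of text with n_min <= n <= n_max."""
    grams = set()
    for n in range(n_min, n_max + 1):
        for i in range(len(text) - n + 1):
            grams.add(text[i:i + n])
    return grams

def jaccard(a, b):
    """Overlap between two sets, from 0.0 (disjoint) to 1.0 (identical)."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0

clean, typo = "stupid", "stuupid"

# Word level: the two spellings are simply different tokens
word_overlap = jaccard({clean}, {typo})
# Character level: many n-grams ("st", "tu", "pid", ...) are shared
char_overlap = jaccard(char_ngrams(clean), char_ngrams(typo))

print(word_overlap, char_overlap)
```

A word-only model sees nothing in common between the two spellings, while the character n-grams still overlap substantially.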

2. Code Analysis

# Import required libraries

import numpy as np

import pandas as pd

# scikit-learn libraries for text processing and modeling

from sklearn.feature_extraction.text import TfidfVectorizer

from sklearn.linear_model import LogisticRegression

from sklearn.model_selection import cross_val_score

from scipy.sparse import hstack

# Define toxic comment categories for classification

class_names = ['toxic', 'severe_toxic', 'obscene', 'threat', 'insult', 'identity_hate']

# Load data and handle missing values with empty spaces

train = pd.read_csv('../input/train.csv').fillna(' ')

test = pd.read_csv('../input/test.csv').fillna(' ')

# Extract comment text from training and test data

train_text = train['comment_text']

test_text = test['comment_text']

all_text = pd.concat([train_text, test_text])

# Word-level TF-IDF vectorization settings

word_vectorizer = TfidfVectorizer(
    sublinear_tf=True,        # Apply log scale to TF values
    strip_accents='unicode',  # Remove accents
    analyzer='word',          # Word-level analysis
    token_pattern=r'\w{1,}',  # Recognize one or more word characters as tokens
    stop_words='english',     # Remove English stop words
    ngram_range=(1, 1),       # Use single words only (unigram)
    max_features=10000)       # Use maximum of 10000 features

word_vectorizer.fit(all_text)

train_word_features = word_vectorizer.transform(train_text)

test_word_features = word_vectorizer.transform(test_text)

# Character-level TF-IDF vectorization settings

char_vectorizer = TfidfVectorizer(
    sublinear_tf=True,        # Apply log scale to TF values
    strip_accents='unicode',  # Remove accents
    analyzer='char',          # Character-level analysis
    stop_words='english',     # Ignored when analyzer='char' (stop words only apply to word analysis)
    ngram_range=(2, 6),       # Use 2-6 character sequences
    max_features=50000)       # Use maximum of 50000 features

char_vectorizer.fit(all_text)

train_char_features = char_vectorizer.transform(train_text)

test_char_features = char_vectorizer.transform(test_text)

# Horizontally combine word and character features

train_features = hstack([train_char_features, train_word_features])

test_features = hstack([test_char_features, test_word_features])

# Train models and make predictions for each toxic category

scores = []

submission = pd.DataFrame.from_dict({'id': test['id']})

for class_name in class_names:
    # Extract target data for current category
    train_target = train[class_name]

    # Initialize logistic regression model (L2 regularization, SAG optimizer)
    classifier = LogisticRegression(C=0.1, solver='sag')

    # Calculate ROC-AUC score using 3-fold cross-validation
    cv_score = np.mean(cross_val_score(classifier, train_features, train_target, cv=3, scoring='roc_auc'))
    scores.append(cv_score)
    print('CV score for class {} is {}'.format(class_name, cv_score))

    # Train model on full training data and predict test data
    classifier.fit(train_features, train_target)
    submission[class_name] = classifier.predict_proba(test_features)[:, 1]

# Calculate average score across all categories

print('Total CV score is {}'.format(np.mean(scores)))

# Save predictions to CSV file

submission.to_csv('submission.csv', index=False)

Fourth Kernel: Classifying multi-label comments (0.9741 lb)

- Also using logistic regression.

Insight / Summary:

1. Unlabelled data

- The mean of each label column is very small (some well below 0.05), so most comments are not labelled as positive in a given category.

- From this I guess that there are many comments which are not labelled in any of the six categories.

unlabelled_in_all = train_df[(train_df['toxic']!=1) & (train_df['severe_toxic']!=1) & (train_df['obscene']!=1) &
                             (train_df['threat']!=1) & (train_df['insult']!=1) & (train_df['identity_hate']!=1)]

print('Percentage of unlabelled comments is ', len(unlabelled_in_all)/len(train_df)*100)

# result: Percentage of unlabelled comments is 89.83211235124176

# Let's look at the character length for the rows in the training data and record these

train_df['char_length'] = train_df['comment_text'].apply(lambda x: len(str(x)))

# look at the histogram plot for text length

sns.set()

train_df['char_length'].hist()

plt.show()

- Most comments are within 500 characters, with some up to 5,000 characters long.

2. Manually cleaning comment text

def clean_text(text):
    text = text.lower()
    text = re.sub(r"what's", "what is ", text)
    text = re.sub(r"\'s", " ", text)
    text = re.sub(r"\'ve", " have ", text)
    text = re.sub(r"can't", "cannot ", text)
    text = re.sub(r"n't", " not ", text)
    text = re.sub(r"i'm", "i am ", text)
    text = re.sub(r"\'re", " are ", text)
    text = re.sub(r"\'d", " would ", text)
    text = re.sub(r"\'ll", " will ", text)
    text = re.sub(r"\'scuse", " excuse ", text)
    text = re.sub(r'\W', ' ', text)
    text = re.sub(r'\s+', ' ', text)
    text = text.strip(' ')
    return text

# clean the comment_text in train_df [Thanks to Pulkit Jha for the useful pointer.]

train_df['comment_text'] = train_df['comment_text'].map(lambda com : clean_text(com))

# clean the comment_text in test_df [Thanks, Pulkit Jha.]

test_df['comment_text'] = test_df['comment_text'].map(lambda com : clean_text(com))
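One detail worth noticing in `clean_text` is that the order of the substitutions matters: the specific rule for "can't" has to run before the generic "n't" rule. The sketch below shows this with just those two rules; `expand` and the two rule lists are hypothetical names made up for the illustration.

```python
import re

def expand(text, rules):
    """Apply (pattern, replacement) rules in order, then tidy whitespace."""
    for pattern, replacement in rules:
        text = re.sub(pattern, replacement, text)
    return re.sub(r"\s+", " ", text).strip()

SPECIFIC_FIRST = [(r"can't", "cannot "), (r"n't", " not ")]
GENERIC_FIRST = [(r"n't", " not "), (r"can't", "cannot ")]

sample = "i can't and won't"
good = expand(sample, SPECIFIC_FIRST)
bad = expand(sample, GENERIC_FIRST)
print(good)
print(bad)
```

Both orders turn "won't" into "wo not", which is a limitation of this kind of hand-written rule list rather than of the ordering.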

3. Problem Transformation

- One way to approach a multi-label classification problem is to transform it into one or more single-label classification problems.

- This is known as 'problem transformation'. There are three methods:

- Binary Relevance. This is probably the simplest method: it treats each label as a separate binary classification problem. The key assumption here, though, is that there is no correlation among the various labels.

- Classifier Chains. In this method, the first classifier is trained on the input X. Then the subsequent classifiers are trained on the input X and all previous classifiers' predictions in the chain. This method attempts to draw signal from the correlation among preceding target variables.

- Label Powerset. This method transforms the problem into a multi-class problem where the classes are all the unique label combinations. In our case here, where there are six labels, Label Powerset would in effect turn this into a problem with up to 2^6 = 64 classes. {Thanks Joshua for pointing out.}
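The kernel only implements the first two methods, so here is a minimal sketch of what the Label Powerset transformation does to the targets. `label_powerset` is a hypothetical helper and the label rows are made up; a real pipeline would then train one multi-class classifier on the resulting class ids.

```python
def label_powerset(label_rows):
    """Map every distinct label combination to its own class id."""
    combo_to_class = {}
    classes = []
    for row in label_rows:
        key = tuple(row)
        if key not in combo_to_class:
            combo_to_class[key] = len(combo_to_class)
        classes.append(combo_to_class[key])
    return classes, combo_to_class

# Made-up rows: toxic, severe_toxic, obscene, threat, insult, identity_hate
label_rows = [
    (0, 0, 0, 0, 0, 0),
    (1, 0, 1, 0, 1, 0),
    (0, 0, 0, 0, 0, 0),
    (1, 0, 0, 0, 0, 0),
    (1, 0, 1, 0, 1, 0),
]
classes, combo_to_class = label_powerset(label_rows)
print(classes)
print(len(combo_to_class), "classes out of a possible", 2 ** 6)
```

Only combinations that actually occur in the training data become classes, so the number of classes is usually well below 64, but rare combinations end up with very few training examples.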

1) Binary Relevance

# import and instantiate the Logistic Regression model

from sklearn.linear_model import LogisticRegression

from sklearn.metrics import accuracy_score

logreg = LogisticRegression(C=12.0)

# create submission file

submission_binary = pd.read_csv('../input/sample_submission.csv')

for label in cols_target:
    print('... Processing {}'.format(label))
    y = train_df[label]
    # train the model using X_dtm & y
    logreg.fit(X_dtm, y)
    # compute the training accuracy
    y_pred_X = logreg.predict(X_dtm)
    print('Training accuracy is {}'.format(accuracy_score(y, y_pred_X)))
    # compute the predicted probabilities for X_test_dtm
    test_y_prob = logreg.predict_proba(test_X_dtm)[:,1]
    submission_binary[label] = test_y_prob

2) Classifier Chains

# create submission file

submission_chains = pd.read_csv('../input/sample_submission.csv')

# create a function to add features

def add_feature(X, feature_to_add):
    '''
    Returns sparse feature matrix with added feature.
    feature_to_add can also be a list of features.
    '''
    from scipy.sparse import csr_matrix, hstack
    return hstack([X, csr_matrix(feature_to_add).T], 'csr')

for label in cols_target:
    print('... Processing {}'.format(label))
    y = train_df[label]
    # train the model using X_dtm & y
    logreg.fit(X_dtm,y)
    # compute the training accuracy
    y_pred_X = logreg.predict(X_dtm)
    print('Training Accuracy is {}'.format(accuracy_score(y,y_pred_X)))
    # make predictions from test_X
    test_y = logreg.predict(test_X_dtm)
    test_y_prob = logreg.predict_proba(test_X_dtm)[:,1]
    submission_chains[label] = test_y_prob
    # chain current label to X_dtm
    X_dtm = add_feature(X_dtm, y)
    print('Shape of X_dtm is now {}'.format(X_dtm.shape))
    # chain current label predictions to test_X_dtm
    test_X_dtm = add_feature(test_X_dtm, test_y)
    print('Shape of test_X_dtm is now {}'.format(test_X_dtm.shape))
