This post heavily relies on Andrew Ng's lecture:

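Convolutional Neural Networks

Offered by DeepLearning.AI. In the fourth course of the Deep Learning Specialization, you will understand how computer vision has evolved and become familiar with exciting applications such as autonomous driving, face recognition, and reading radiology images…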

www.coursera.org

Advantages of Convolutional Layers Over Fully Connected Layers

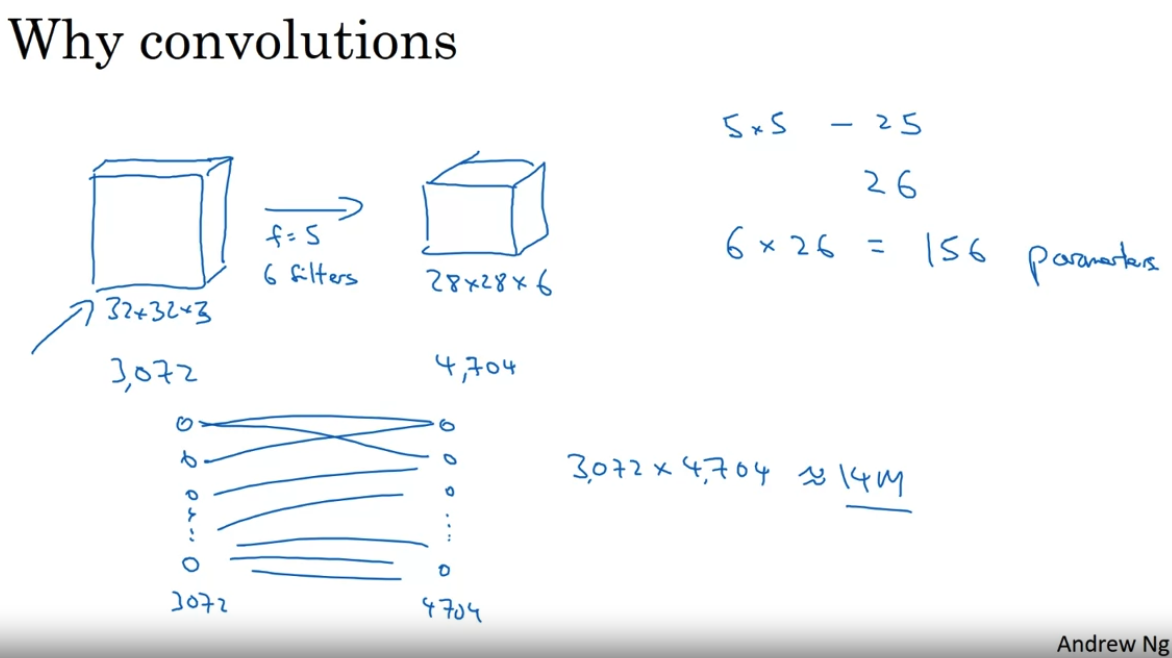

- Following the example above, if we used a fully connected layer instead of a convolutional layer, we would have to connect 3,072 input neurons to 4,704 output neurons.

- That is, the weight matrix would have 3,072 x 4,704 parameters, which is over 14 million.

- It is possible to train a model with 14 million parameters, but given that the input image is not that big, it is extremely inefficient.

- When we use a convolutional layer instead:

- f is the filter size (here f = 5), and we have 6 filters.

- The number of parameters in the conv layer: [5 * 5 * 3 (#channels) + 1 (bias)] * 6 (#filters) = 456

- The number of parameters remains quite small.
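The two counts above can be reproduced with a few lines of arithmetic. This is a minimal sketch that assumes the shapes implied by the numbers in the list: a 32 x 32 x 3 input (3,072 values) and a 28 x 28 x 6 output (4,704 values) produced by six 5 x 5 filters. The function names are illustrative and do not come from the lecture.

```python
def fc_weight_count(n_in: int, n_out: int) -> int:
    """Weights in a fully connected layer (biases not counted, as in the text)."""
    return n_in * n_out


def conv_param_count(f: int, channels: int, n_filters: int) -> int:
    """Each filter has f * f * channels weights plus one bias."""
    return (f * f * channels + 1) * n_filters


# Assumed shapes: 32x32x3 input, six 5x5 filters, 28x28x6 output.
n_in = 32 * 32 * 3    # 3,072 input values
n_out = 28 * 28 * 6   # 4,704 output values

fc_params = fc_weight_count(n_in, n_out)
conv_params = conv_param_count(f=5, channels=3, n_filters=6)

print(f"fully connected: {fc_params:,}")    # 14,450,688
print(f"convolutional:   {conv_params:,}")  # 456
```

Note that the convolutional count does not depend on the image size at all, only on the filter size, the number of input channels, and the number of filters.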

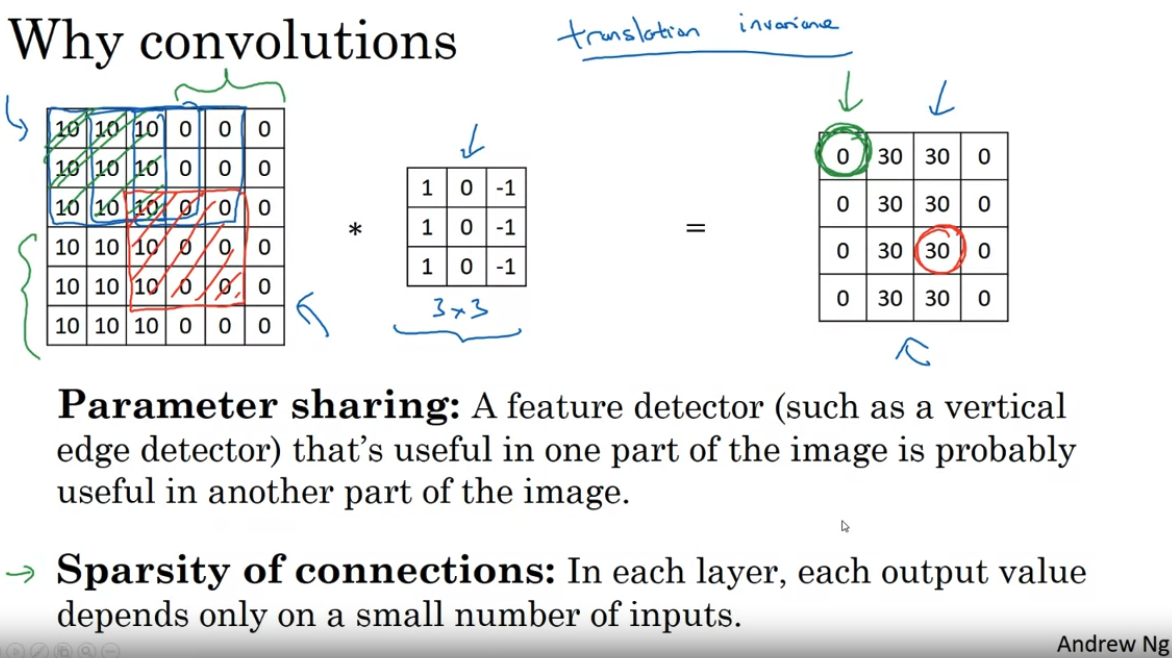

Reasons why a convnet has relatively few parameters (= advantages of a convnet):

- Parameter Sharing

- A feature detector (such as a vertical edge detector) that is useful in one part of the image is probably useful in another part as well, so we don't need to learn a separate feature detector for each part of the image.

- Of course, different parts of an image may look different.

- However, they are usually similar enough that sharing the same feature detectors across the whole image works fine.

- Sparsity of Connections

- In this example, a single value in the output depends only on 9 values (pixels) of the input.

- Other pixels don't affect that output at all.
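Both properties can be checked on a toy example. The sketch below is an assumption-laden illustration, not code from the lecture: it uses a hypothetical 6 x 6 image, a 3 x 3 vertical-edge filter, and a hand-written `conv2d_valid` helper in plain Python. The same 9 weights are reused at every position (parameter sharing), and changing a pixel outside a 3 x 3 window leaves that window's output untouched (sparsity of connections).

```python
def conv2d_valid(image, kernel):
    """Valid cross-correlation of a 2D image (list of lists) with a 2D kernel."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[i + di][j + dj] * kernel[di][dj]
                for di in range(kh) for dj in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


# Vertical-edge filter: these 9 weights are shared by every output position.
kernel = [[1, 0, -1],
          [1, 0, -1],
          [1, 0, -1]]

# Toy image: bright left half, dark right half.
image = [[10, 10, 10, 0, 0, 0] for _ in range(6)]
out = conv2d_valid(image, kernel)

# Change one pixel far away from the top-left 3x3 window.
changed = [row[:] for row in image]
changed[5][5] = 99
out_changed = conv2d_valid(changed, kernel)

print(out[0])                             # [0, 30, 30, 0]
print(out[0][0] == out_changed[0][0])     # True: pixel (5, 5) is outside that window
print(out[3][3] == out_changed[3][3])     # False: pixel (5, 5) is inside that window
```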

Translation Invariance

- These characteristics make CNNs very good at capturing translation invariance.

- For example, a picture of a cat shifted a couple of pixels to the right is still clearly a cat.

- The convolutional structure helps the neural network encode the fact that an image shifted by a few pixels should produce very similar features and should probably be assigned the same label (target).

- The fact that we are applying the same filter to all the positions of the image both in the early layers and the later layers helps a neural network automatically learn to be more robust and to better capture the desirable property of translation invariance.
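The effect of applying the same filter at every position can be seen on a small example. The following is a rough sketch under stated assumptions: the 7 x 7 image, the `shift_right` helper, and the use of the maximum response as a summary are all illustrative choices that do not appear in the lecture. Shifting the input one pixel to the right shifts the feature map by one pixel, and the strongest response keeps the same value.

```python
def conv2d_valid(image, kernel):
    """Valid cross-correlation of a 2D image (list of lists) with a 2D kernel."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[i + di][j + dj] * kernel[di][dj]
                for di in range(kh) for dj in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


def shift_right(image, k=1):
    """Shift every row k pixels to the right (k >= 1), filling the left with zeros."""
    return [[0] * k + row[:-k] for row in image]


kernel = [[1, 0, -1],
          [1, 0, -1],
          [1, 0, -1]]

# Hypothetical 7x7 image with a 2x2 bright patch near the center.
image = [[0] * 7 for _ in range(7)]
for r in (2, 3):
    for c in (2, 3):
        image[r][c] = 1

out = conv2d_valid(image, kernel)
out_shifted = conv2d_valid(shift_right(image), kernel)

# The feature map of the shifted image is the original feature map moved one column.
same_up_to_shift = all(s[1:] == o[:-1] for s, o in zip(out_shifted, out))
print(same_up_to_shift)  # True

# The strongest response has the same value in both feature maps.
max_response = max(max(row) for row in out)
max_response_shifted = max(max(row) for row in out_shifted)
print(max_response == max_response_shifted)  # True
```

Strictly speaking, the convolution itself is shift-equivariant (the features move with the input); the position-independent summary in the last step is what makes the final value invariant.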

You can find more information about translation invariance in another post of mine:

[Kaggle Study] 16. Translation Invariance

Translation invariance in CNN means that even if the input position changes, the output maintains the same value. Actually, CNN networks themselves have translation equivariance (variance). Meaning that, when computing with convolution…

dongsunseng.com

Focus on progress, not perfection. Every step forward is a step in the right direction.

- Max Holloway -
