Fourth Competition following Yuhan Lee's curriculum. Binary classification competition using image data.

Statoil/C-CORE Iceberg Classifier Challenge

Ship or iceberg, can you decide from space?

www.kaggle.com

First Kernel: Keras Model for Beginners (0.210 on LB)+EDA+R&D

- EDA + basic modeling

- Uses Keras, which makes building deep neural networks very easy.

- Basic conv net model.

Insights/Summary:

1. Calculating backscatter coefficient

Formula: σo(dB) = βo(dB) + 10log10[sin(ip)/sin(ic)]

- Components:

- σo(dB)

- Final backscatter coefficient

- Expressed in decibels (dB)

- Value directly provided in the dataset

- βo(dB)

- Basic backscatter measurement

- Raw data before calibration

- ip (incidence pixel angle)

- Incidence angle for a specific pixel

- The angle at which radar signals reach a specific point on Earth's surface

- ic (incidence center angle)

- Incidence angle at the center of the image

- Used as a reference point

- 10log10[sin(ip)/sin(ic)]

- Angle correction term

- Corrects for angle differences at different image locations

- Compensates for geometric distortion based on pixel location

- Purpose of this formula:

- Standardize raw backscatter data

- Correct distortions due to satellite observation angles

- Make data from different locations comparable

- Practical applications:

- Corrected backscatter coefficients are used for object identification

- Enables more accurate characterization of various objects like icebergs, ships, and land

- Contributes to improving data consistency and reliability

- The standardized data obtained through this formula can be used as input for machine learning models, enabling automated object classification.
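The correction can be sketched in a few lines of Python. This is only an illustration of the formula as written above: the function name `sigma0_db` and the sample values are made up for the example, and the angles are assumed to be given in degrees.

```python
import math

def sigma0_db(beta0_db, ip_deg, ic_deg):
    """Apply the angle correction: sigma0 = beta0 + 10*log10(sin(ip)/sin(ic))."""
    ratio = math.sin(math.radians(ip_deg)) / math.sin(math.radians(ic_deg))
    return beta0_db + 10.0 * math.log10(ratio)

# Hypothetical values: a pixel seen at 40 degrees in an image centred at 35 degrees
corrected = sigma0_db(beta0_db=-20.0, ip_deg=40.0, ic_deg=35.0)

# At the image centre (ip == ic) the correction term is zero
centre = sigma0_db(beta0_db=-20.0, ip_deg=35.0, ic_deg=35.0)

print(round(corrected, 3), centre)
```

Pixels whose incidence angle is larger than the centre angle get a small positive correction, and pixels with a smaller angle get a negative one.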

2. HH & HV

- Sentinel Satellite's Radar Polarization System:

- Transmission Characteristics:

- Transmits signals only in H (Horizontal) polarization

- No V (Vertical) polarization is transmitted for this data

- Similar system to India's RISAT

- Reception Characteristics:

- H polarized signals that hit objects are reflected in two forms:

- HH: Horizontally transmitted signals reflected horizontally (H)

- HV: Horizontally transmitted signals reflected vertically (V)

- Why is there no VV?

- Because nothing is transmitted in V (vertical) polarization for this data

- Therefore, VV or VH data cannot exist

- Data Processing Method:

- Extract data from two bands (HH, HV)

- Create a third channel by calculating the average of these two bands

- Results in creation of a 3-channel image similar to RGB

- This approach:

- Enables better understanding of object characteristics

- Allows application of conventional image processing techniques

- Facilitates visualization and analysis

- This becomes an important feature showing how objects reflect radar signals, enabling distinction between icebergs and ships.

#Generate the training data

#Create 3 bands having HH, HV and avg of both

X_band_1=np.array([np.array(band).astype(np.float32).reshape(75, 75) for band in train["band_1"]])

X_band_2=np.array([np.array(band).astype(np.float32).reshape(75, 75) for band in train["band_2"]])

X_train = np.concatenate([X_band_1[:, :, :, np.newaxis], X_band_2[:, :, :, np.newaxis],((X_band_1+X_band_2)/2)[:, :, :, np.newaxis]], axis=-1)

3. Defining callback functions

from keras.callbacks import EarlyStopping, ModelCheckpoint

def get_callbacks(filepath, patience=2):
    # Set up two callbacks
    # 1. EarlyStopping callback
    es = EarlyStopping(
        'val_loss',          # Metric to monitor
        patience=patience,   # How many epochs to wait without improvement
        mode="min"           # Monitor for decreasing loss values
    )
    # 2. ModelCheckpoint callback
    msave = ModelCheckpoint(
        filepath,            # Path to save the model
        save_best_only=True  # Save only the best performing model
    )
    return [es, msave]

# Callback usage setup
file_path = ".model_weights.hdf5"  # Path to save model weights
callbacks = get_callbacks(filepath=file_path, patience=5)  # Set patience to 5

- EarlyStopping:

- Purpose: Prevent overfitting, save training time

- Operation:

- Monitors validation loss

- Stops training if there's no improvement for the specified number of patience epochs

- In this case, training stops if there's no improvement for 5 epochs

- ModelCheckpoint:

- Purpose: Save the optimal model

- Operation:

- Monitors model performance

- Only saves the weights of the model that shows the best performance

- Saves in .hdf5 file format
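The patience rule described above can be illustrated without Keras. The sketch below is a simplified re-implementation of the idea, not the Keras code itself; `early_stopping_epoch` and the loss values are made up for the example.

```python
def early_stopping_epoch(val_losses, patience):
    """Return the 1-based epoch at which training would stop, or None if it never stops."""
    best = float("inf")
    wait = 0
    for epoch, loss in enumerate(val_losses, start=1):
        if loss < best:
            # Improvement: remember it and reset the counter
            best = loss
            wait = 0
        else:
            # No improvement: count the epoch and stop once patience is used up
            wait += 1
            if wait >= patience:
                return epoch
    return None

losses = [0.60, 0.45, 0.40, 0.41, 0.42, 0.40, 0.43, 0.44]
stop_at = early_stopping_epoch(losses, patience=5)
print(stop_at)
```

The best loss is reached at epoch 3, so with `patience=5` the loop stops five non-improving epochs later. A checkpoint with `save_best_only=True` would still hold the epoch-3 weights at that point.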

4. Conclusion

- "To increase the score, I have tried Speckle filtering, Indicence angle normalization and other preprocessing and they don't seems to work. You may try and see but for me they are not giving any good results."

- "You can't be on top-10 using this kernel, so here is one beautiful peice of information. The test dataset contain 8000 images, We can exploit this. We can do pseudo labelling to increase the predictions."

Pseudo Labeling?

- Pseudo labeling is a semi-supervised learning technique.

- How it works:

- First train the model with labeled data (training data)

- Use this model to make predictions on unlabeled data (test data)

- Use predictions with high confidence as if they were actual labels

- Combine this "pseudo-labeled" data with the original training data for retraining

# Example process (illustrative; assumes a compiled Keras model and NumPy arrays)
# 1. Initial model training
model.fit(X_train, y_train)
# 2. Predictions on test data (sigmoid outputs between 0 and 1)
predictions = model.predict(X_test).ravel()
# 3. Select predictions with high confidence in either class
confidence_threshold = 0.95
confident_mask = (predictions > confidence_threshold) | (predictions < 1 - confidence_threshold)
# 4. Add this data to training data, using the rounded predictions as labels
X_train_new = np.concatenate([X_train, X_test[confident_mask]])
y_train_new = np.concatenate([y_train, (predictions[confident_mask] > 0.5).astype(int)])
# 5. Retrain with new data
model.fit(X_train_new, y_train_new)

- Advantages:

- Increases training data

- Improves model generalization performance

- Can incorporate test data characteristics into training

- Precautions:

- Incorrect predictions might be included in training data

- Setting confidence threshold is crucial

- Initial model performance is important

- In this case:

- The test set contains roughly 8,000 images (8,424)

- Those predicted with high confidence are used as additional training data

- Can expect improvement in model performance

Second Kernel: Transfer Learning with VGG-16 CNN+AUG LB 0.1712

- Same author as the first kernel.

- Transfer learning with VGG-16 architecture.

- Additionally uses the inc_angle data.

Insights/Summary:

1. VGG-16 architecture brief explanation

- Simple Structure:

- Uses 3x3 kernels in all convolutional layers

- Uses 2x2 max pooling in all pooling layers

- Repeats consistent patterns

- Depth:

- 16 layers (13 convolutional layers + 3 fully connected layers)

- Very deep structure for its time

- Characteristics:

- Uses ReLU activation function

- Prevents overfitting with dropout

- Reduces number of parameters with small filter sizes

- Advantages:

- Simple and effective architecture

- Good feature extraction capability

- Transferable to various tasks (transfer learning)

- Disadvantages:

- Large number of parameters (138M)

- High memory requirements

- Slow training speed
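The 138M figure can be checked by hand. The sketch below counts weights and biases for the standard VGG-16 layout with a 224x224 RGB input and the 1000-class ImageNet head; the `cfg` list is simply a compact way of writing that layout for this example.

```python
# VGG-16 layer configuration: numbers are conv output channels, "M" is 2x2 max pooling
cfg = [64, 64, "M", 128, 128, "M", 256, 256, 256, "M",
       512, 512, 512, "M", 512, 512, 512, "M"]

conv_params = 0
conv_layers = 0
in_channels = 3  # RGB input
for item in cfg:
    if item == "M":
        continue  # pooling layers have no parameters
    conv_params += 3 * 3 * in_channels * item + item  # 3x3 weights + biases
    conv_layers += 1
    in_channels = item

# Fully connected head for a 224x224 input: 7*7*512 -> 4096 -> 4096 -> 1000
fc_sizes = [7 * 7 * 512, 4096, 4096, 1000]
fc_params = sum(a * b + b for a, b in zip(fc_sizes, fc_sizes[1:]))

total_params = conv_params + fc_params
print(conv_layers, conv_params, fc_params, total_params)
```

Most of the parameters sit in the three fully connected layers, not in the 13 convolutional layers.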

2. Using pretrained architecture: Transfer learning

- Deep learning has many hyperparameters, and tuning them can take weeks or months. Researchers generally do this tuning and publish a paper once they find an architecture that performs better than the others.

- Since the model is pre-trained, it converges very fast, but you still need a GPU to use it. Due to some library issues, it doesn't work on CPU.

- For our purpose, we can use the architectures that those researchers have made available.

- With pretrained nets, whose layers already 'know' how to extract features, we don't have to tune the hyperparameters. Since they are already trained on some dataset (say ImageNet), their pre-trained weights provide a good initialization, so our convnet converges very fast; training these deep architectures from scratch can otherwise take days. That's the idea behind transfer learning. Examples are VGG16, InceptionNet, GoogLeNet, ResNet, etc.

- In this kernel we will use the pretrained VGG-16 network, which performs very well on small images.

- The VGG architecture has proved to work well on small images (CIFAR-10), so I expected it to work well for this dataset as well.

- The code also includes data augmentation steps, which considerably improve performance.

- GPU is needed

- Keras provides an implementation of pretrained VGG in its library, so we don't have to build the net ourselves.

- Here we remove the last layer of VGG and add our own sigmoid layer for binary predictions.

- The following code will NOT WORK in a Kaggle notebook, since the model weights cannot be downloaded there; however, you can copy and paste the code into your own notebook to make it work.

3. Data prep

train = pd.read_json("../input/train.json")
target_train = train['is_iceberg']
test = pd.read_json("../input/test.json")

# Convert inc_angle to numeric; non-numeric entries such as 'na' become NaN
train['inc_angle'] = pd.to_numeric(train['inc_angle'], errors='coerce')  # We have only 133 NAs.
test['inc_angle'] = pd.to_numeric(test['inc_angle'], errors='coerce')

# Forward-fill the missing training angles
# (fillna(method=...) is deprecated in recent pandas in favor of .ffill())
train['inc_angle'] = train['inc_angle'].fillna(method='pad')
X_angle = train['inc_angle']
X_test_angle = test['inc_angle']

# Same as the first kernel below --------------

#Generate the training data

X_band_1=np.array([np.array(band).astype(np.float32).reshape(75, 75) for band in train["band_1"]])

X_band_2=np.array([np.array(band).astype(np.float32).reshape(75, 75) for band in train["band_2"]])

X_band_3=(X_band_1+X_band_2)/2

#X_band_3=np.array([np.full((75, 75), angel).astype(np.float32) for angel in train["inc_angle"]])

X_train = np.concatenate([X_band_1[:, :, :, np.newaxis]

, X_band_2[:, :, :, np.newaxis]

, X_band_3[:, :, :, np.newaxis]], axis=-1)

X_band_test_1=np.array([np.array(band).astype(np.float32).reshape(75, 75) for band in test["band_1"]])

X_band_test_2=np.array([np.array(band).astype(np.float32).reshape(75, 75) for band in test["band_2"]])

X_band_test_3=(X_band_test_1+X_band_test_2)/2

#X_band_test_3=np.array([np.full((75, 75), angel).astype(np.float32) for angel in test["inc_angle"]])

X_test = np.concatenate([X_band_test_1[:, :, :, np.newaxis]

, X_band_test_2[:, :, :, np.newaxis]

, X_band_test_3[:, :, :, np.newaxis]], axis=-1)

- In the second kernel, unlike the first one, the 'inc_angle' column from the given data was utilized. Here's how it was processed:

- pd.to_numeric with errors='coerce' option:

- This converts values to numeric format

- The 'coerce' option converts non-convertible values to NaN (Not a Number)

- fillna(method='pad'):

- This fills the NaN values that were just created

- Uses forward filling method, which fills NaN values with the last valid value that appeared before them

- Also known as 'forward fill' or 'propagate' method
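To make the two steps concrete, here is a plain-Python sketch that mimics them on a toy list. It is not the pandas implementation; `to_numeric_coerce`, `forward_fill` and the sample values are hypothetical names and data chosen for the illustration.

```python
import math

def to_numeric_coerce(values):
    """Convert each value to float; anything that cannot be parsed becomes NaN."""
    out = []
    for v in values:
        try:
            out.append(float(v))
        except (TypeError, ValueError):
            out.append(math.nan)
    return out

def forward_fill(values):
    """Replace each NaN with the last valid value seen before it."""
    out, last = [], math.nan
    for v in values:
        if not math.isnan(v):
            last = v
        out.append(last)
    return out

raw = ["na", 43.9, "na", 38.1, "na", "na"]  # hypothetical inc_angle column
filled = forward_fill(to_numeric_coerce(raw))
print(filled)
```

Note that a missing value at the very start has nothing before it to copy, so it stays NaN. Forward filling in pandas behaves the same way, which is worth checking before feeding the angles to a model.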

4. Data Augmentation(You can check my previous post with details about Data Augmentation: https://dongsunseng.com/entry/Kaggle-Study-11-Data-Augmentation)

#Data Aug for multi-input
from keras.preprocessing.image import ImageDataGenerator

batch_size = 64

# Define the image transformations here
gen = ImageDataGenerator(horizontal_flip = True,
                         vertical_flip = True,
                         width_shift_range = 0.,
                         height_shift_range = 0.,
                         channel_shift_range = 0,
                         zoom_range = 0.2,
                         rotation_range = 10)

- "multi-input" means image data (X1) and angle data (X2)

- Image generator options:

- horizontal_flip = True -> Horizontal flipping

- vertical_flip = True -> Vertical flipping

- width_shift_range = 0. -> Width shift range (0 = disabled)

- height_shift_range = 0. -> Height shift range (0 = disabled)

- channel_shift_range = 0 -> Channel shift range (0 = disabled)

- zoom_range = 0.2 -> Zoom range (20% zoom in/out)

- rotation_range = 10 -> Rotation range (±10 degrees)

# Here is the function that merges our two generators
# We use the exact same generator with the same random seed for both the y and angle arrays
def gen_flow_for_two_inputs(X1, X2, y):
    genX1 = gen.flow(X1, y, batch_size=batch_size, seed=55)
    genX2 = gen.flow(X1, X2, batch_size=batch_size, seed=55)
    while True:
        X1i = next(genX1)
        X2i = next(genX2)
        #Assert arrays are equal - this was for peace of mind, but slows down training
        #np.testing.assert_array_equal(X1i[0],X2i[0])
        yield [X1i[0], X2i[1]], X1i[1]

- X1: Image data & X2: Angle data & y: Label/Target data

- First Generator genX1: images and labels

- Second Generator genX2: images and angle data

- X1i[0]: augmented image & X1i[1]: label & X2i[1]: angle data

- Return format: ([augmented image, angle data], label)

- Images and angle data remain paired because both generators use the same seed and are advanced together inside the while loop
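Why a shared seed keeps the pairs aligned can be shown with a toy example. This is only an analogy using the standard library, not how Keras generators are implemented; `shuffled_batches` and the sample data are invented for the sketch.

```python
import random

def shuffled_batches(data, batch_size, seed):
    """Yield batches of `data` in an order determined only by `seed`."""
    rng = random.Random(seed)
    order = list(range(len(data)))
    rng.shuffle(order)
    for start in range(0, len(order), batch_size):
        yield [data[i] for i in order[start:start + batch_size]]

images = ["img0", "img1", "img2", "img3", "img4", "img5"]
angles = [30.1, 31.2, 32.3, 33.4, 34.5, 35.6]

pairs = []
# Two independent generators, same seed: they shuffle in exactly the same order
for img_batch, angle_batch in zip(shuffled_batches(images, 2, seed=55),
                                  shuffled_batches(angles, 2, seed=55)):
    pairs.extend(zip(img_batch, angle_batch))

print(pairs)
```

With different seeds the two streams would be shuffled differently and an image could end up next to another image's angle, which is what the commented-out assertion in the kernel guards against.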

5. Modeling

from keras.applications.vgg16 import VGG16
from keras.layers import Input, Dense, Dropout, GlobalMaxPooling2D, concatenate
from keras.models import Model

def getVggAngleModel():
    # 1. Configure layers for angle input
    input_2 = Input(shape=[1], name="angle")  # Angle data input layer
    angle_layer = Dense(1)(input_2)           # Angle data processing layer
    # 2. Load VGG16 base model
    base_model = VGG16(
        weights='imagenet',             # Use ImageNet pre-trained weights
        include_top=False,              # Exclude VGG16's existing fully connected layers
        input_shape=X_train.shape[1:],  # Set input image size
        classes=1                       # Only relevant when include_top=True; has no effect here
    )
    # 3. Get output from specific VGG16 layer (block5_pool)
    x = base_model.get_layer('block5_pool').output
    # 4. Apply Global Max Pooling
    x = GlobalMaxPooling2D()(x)
    # 5. Combine image features and angle data
    merge_one = concatenate([x, angle_layer])
    # 6. Add new fully connected layers
    merge_one = Dense(512, activation='relu', name='fc2')(merge_one)
    merge_one = Dropout(0.3)(merge_one)  # Prevent overfitting
    merge_one = Dense(512, activation='relu', name='fc3')(merge_one)
    merge_one = Dropout(0.3)(merge_one)
    # 7. Output layer (binary classification)
    predictions = Dense(1, activation='sigmoid')(merge_one)
    # 8. Final model configuration
    model = Model(
        inputs=[base_model.input, input_2],  # Two inputs
        outputs=predictions                  # One output
    )
    return model

- How the original VGG16 architecture was modified:

- Added a new structure in place of VGG16's existing fully connected layers

- Took the output of a specific VGG16 layer -> applied global max pooling for dimensionality reduction -> combined image features and angle data

- Modified the final layer for binary classification

- predictions = Dense(1, activation='sigmoid')(merge_one)

- Why global max pooling?

- x = GlobalMaxPooling2D()(x) # Convert feature maps to 1D

- Basic purpose: Dimension Reduction

- VGG16's block5_pool output is a 3D feature map (height × width × channels)

- Global Max Pooling converts this into a 1D vector

- Creates feature vector by extracting maximum value from each channel

- Shapes at each step:

# VGG16's feature map
x = base_model.get_layer('block5_pool').output # (h, w, 512)
# Apply Global Max Pooling
x = GlobalMaxPooling2D()(x) # (512,)
# Transform into a form that's easy to combine with angle data
merge_one = concatenate([x, angle_layer])
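The same reduction can be reproduced with NumPy on a random array. The sketch assumes a single 75x75 input, for which five 2x2 poolings would leave a 2x2 spatial map (75 -> 37 -> 18 -> 9 -> 4 -> 2); the random values and the `angle_feature` number are placeholders, not real activations.

```python
import numpy as np

rng = np.random.default_rng(0)

# Stand-in for the block5_pool output of one image: (height, width, channels)
feature_map = rng.normal(size=(2, 2, 512))

# Global max pooling: keep the largest activation of each channel
pooled = feature_map.max(axis=(0, 1))

# Hypothetical output of the Dense(1) angle layer for the same sample
angle_feature = np.array([0.7])

# Both are now 1D, so they can simply be concatenated
merged = np.concatenate([pooled, angle_feature])
print(pooled.shape, merged.shape)
```

After pooling, each image is described by one number per channel, so appending the single angle feature gives a 513-dimensional vector for the dense layers.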

Third Kernel: Submarineering.EVEN BETTER PUBLIC SCORE until now.

- This is a kernel that stacks solutions by borrowing results from both their own and others' solutions.

Insights/Summary:

1. Overall Summary

- Ensembles 4 different prediction results to make the final prediction.

- 4 solutions:

- https://www.kaggle.com/code/solomonk/pytorch-cnn-densenet-ensemble-lb-0-1538

- https://www.kaggle.com/code/wvadim/keras-tf-lb-0-18

- This is the Fourth kernel we will talk about below.

- https://www.kaggle.com/datasets/submarineering/submission38-lb01448

- submission38.csv and submission43.csv both belong to this kernel.

More details about the solutions used for stacking:

1. https://www.kaggle.com/code/solomonk/pytorch-cnn-densenet-ensemble-lb-0-1538

- Uses DenseNet architecture

- Does not use angle data

- Feature extraction through Bottleneck blocks

- Adjusts network size using Growth rate and reduction parameters

- Simple train/validation split rather than k-fold

- Uses Adam optimizer

- Applies L2 regularization

2. https://www.kaggle.com/datasets/submarineering/submission38-lb01448

As far as I understand, submission38 and submission43 are both results of similar ensemble predictions made by the same author.

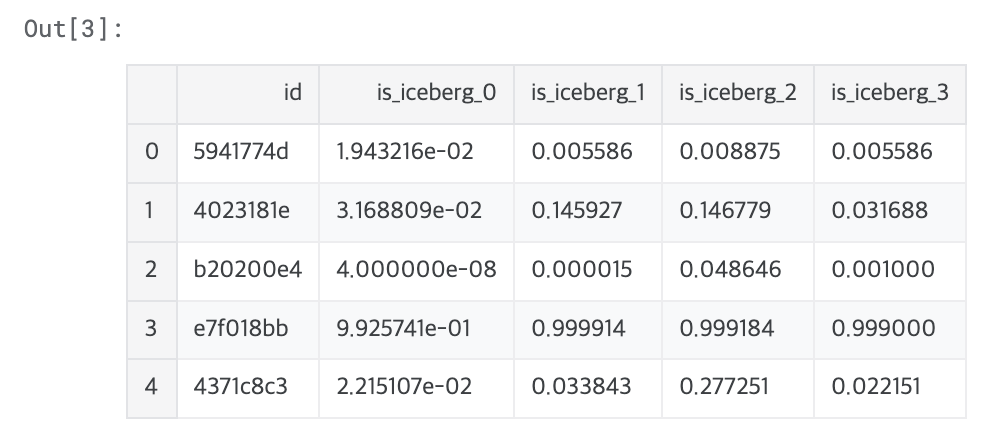

# Read and concatenate submissions

out1 = pd.read_csv("../input/statoil-iceberg-submissions/sub_200_ens_densenet.csv", index_col=0)

out2 = pd.read_csv("../input/statoil-iceberg-submissions/sub_TF_keras.csv", index_col=0)

out3 = pd.read_csv("../input/submission38-lb01448/submission38.csv", index_col=0)

out4 = pd.read_csv("../input/submission38-lb01448/submission43.csv", index_col=0)

concat_sub = pd.concat([out1, out2, out3, out4], axis=1)

cols = list(map(lambda x: "is_iceberg_" + str(x), range(len(concat_sub.columns))))

concat_sub.columns = cols

concat_sub.reset_index(inplace=True)

concat_sub.head()

- Loads prediction results (.csv files) from multiple participants

- Uses pd.concat() to combine predictions from 4 models into a single dataframe

- Changes each model's prediction column name to 'is_iceberg_0', 'is_iceberg_1', etc.

- Calculating basic statistics:

concat_sub['is_iceberg_max'] = concat_sub.iloc[:, 1:6].max(axis=1) # Maximum value

concat_sub['is_iceberg_min'] = concat_sub.iloc[:, 1:6].min(axis=1) # Minimum value

concat_sub['is_iceberg_mean'] = concat_sub.iloc[:, 1:6].mean(axis=1) # Average

concat_sub['is_iceberg_median'] = concat_sub.iloc[:, 1:6].median(axis=1) # Median value
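One detail of the positional slice is worth checking. With an id column and four prediction columns, `iloc[:, 1:6]` initially covers only the four predictions, but once `is_iceberg_max` has been appended it falls inside the same slice. The toy frame below (made-up values, same column naming) shows the effect on the mean; whether this influenced the scores reported in the kernel is not something these notes can confirm.

```python
import pandas as pd

# Toy frame: an id column plus four model predictions (values are made up)
df = pd.DataFrame({
    "id": ["a", "b"],
    "is_iceberg_0": [0.1, 0.9],
    "is_iceberg_1": [0.2, 0.8],
    "is_iceberg_2": [0.3, 0.7],
    "is_iceberg_3": [0.4, 0.6],
})
pred_cols = ["is_iceberg_0", "is_iceberg_1", "is_iceberg_2", "is_iceberg_3"]

# Same order of operations as above: the max column is appended first
df["is_iceberg_max"] = df.iloc[:, 1:6].max(axis=1)

mean_by_position = df.iloc[:, 1:6].mean(axis=1)  # now also covers is_iceberg_max
mean_by_name = df[pred_cols].mean(axis=1)        # only the four predictions

print(mean_by_position.tolist(), mean_by_name.tolist())
```

Selecting the prediction columns by name keeps the statistics independent of how many helper columns have been added. The min and the threshold checks are not affected by the extra column, since the max can never be the smallest value.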

2. Various stacking techniques:

1) Mean Stacking

concat_sub['is_iceberg'] = concat_sub['is_iceberg_mean']

concat_sub[['id', 'is_iceberg']].to_csv('stack_mean.csv', index=False, float_format='%.6f')

- Simply uses the average of all model predictions

- LB score: 0.1698

2) Median Stacking

concat_sub['is_iceberg'] = concat_sub['is_iceberg_median']

concat_sub[['id', 'is_iceberg']].to_csv('stack_median.csv', index=False, float_format='%.6f')

- Uses the median value of all model predictions

- LB score: 0.1575

3) PushOut + Median Stacking

# set up cutoff threshold for lower and upper bounds, easy to twist

cutoff_lo = 0.8

cutoff_hi = 0.2

concat_sub['is_iceberg'] = np.where(np.all(concat_sub.iloc[:,1:6] > cutoff_lo, axis=1), 1,

np.where(np.all(concat_sub.iloc[:,1:6] < cutoff_hi, axis=1),

0, concat_sub['is_iceberg_median']))

concat_sub[['id', 'is_iceberg']].to_csv('stack_pushout_median.csv',

index=False, float_format='%.6f')

- If all models show high confidence (>0.8), set to 1

- If all models show low confidence (<0.2), set to 0

- Otherwise, use median value

- LB score: 0.1940

4) MinMax + Mean Stacking

concat_sub['is_iceberg'] = np.where(np.all(concat_sub.iloc[:,1:6] > cutoff_lo, axis=1),

concat_sub['is_iceberg_max'], # Maximum value when high confidence

np.where(np.all(concat_sub.iloc[:,1:6] < cutoff_hi, axis=1),

concat_sub['is_iceberg_min'], # Minimum value when low confidence

concat_sub['is_iceberg_mean'])) # Mean value otherwise

- If all models show high confidence (>0.8), set to max value

- If all models show low confidence (<0.2), set to min value

- Otherwise, use mean value

- LB score: 0.1622

5) MinMax + Median Stacking

concat_sub['is_iceberg'] = np.where(np.all(concat_sub.iloc[:,1:6] > cutoff_lo, axis=1),

concat_sub['is_iceberg_max'], # Maximum value when high confidence

np.where(np.all(concat_sub.iloc[:,1:6] < cutoff_hi, axis=1),

concat_sub['is_iceberg_min'], # Minimum value when low confidence

concat_sub['is_iceberg_median'])) # Median value otherwise

- If all models show high confidence (>0.8), set to max value

- If all models show low confidence (<0.2), set to min value

- Otherwise, use median value

- LB score: 0.1488

6) MinMax + BestBase Stacking

# load the model with best base performance

sub_base = pd.read_csv('../input/submission38-lb01448/submission43.csv')

concat_sub['is_iceberg_base'] = sub_base['is_iceberg']

concat_sub['is_iceberg'] = np.where(np.all(concat_sub.iloc[:,1:4] > cutoff_lo, axis=1),

concat_sub['is_iceberg_max'],

np.where(np.all(concat_sub.iloc[:,1:4] < cutoff_hi, axis=1),

concat_sub['is_iceberg_min'],

concat_sub['is_iceberg_base']))

concat_sub['is_iceberg'] = np.clip(concat_sub['is_iceberg'].values, 0.001, 0.999)

concat_sub[['id', 'is_iceberg']].to_csv('submission54.csv',

index=False, float_format='%.6f')

- Uses the best performing single model (submission43.csv) as the baseline

- Applies MinMax strategy to adjust to max/min values only when confidence is high or low

- Clips prediction values between 0.001~0.999 to prevent extreme values
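The reason for clipping becomes clear from the log loss metric used in this competition: a single confident but wrong prediction is penalized very heavily. The sketch below uses a hand-written per-sample log loss (`log_loss_single` and `clip` are helper names for this example only).

```python
import math

def log_loss_single(y_true, p):
    """Binary cross-entropy for one sample."""
    return -(y_true * math.log(p) + (1 - y_true) * math.log(1 - p))

def clip(p, lo=0.001, hi=0.999):
    """Keep a probability inside [lo, hi]."""
    return min(max(p, lo), hi)

# A confident but wrong prediction: the true label is 1, the model says (almost) 0
unclipped = log_loss_single(1, 1e-15)
clipped = log_loss_single(1, clip(1e-15))

print(round(unclipped, 2), round(clipped, 2))
```

Clipping caps the worst-case penalty per sample at about 6.9, at the cost of a slightly higher loss on predictions that were correct and very confident.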

Fourth Kernel: Keras+TF LB 0.18

- Data preprocessing + modeling kernel with decent LB.

Insights/Summary:

1. Basic idea

- Images in the dataset are very noisy

- If we remove granular noise, we can make better predictions

- We can construct our own noisy dataset

- It might be interesting to train a denoising autoencoder on the dataset to:

- Extract global features

- Use these features for further model training

2. Details

import numpy as np
from skimage.color import rgb2gray
from skimage.filters import gaussian
from skimage.restoration import denoise_tv_chambolle

# Translate data to an image format
def color_composite(data):
    # data: DataFrame containing band_1 and band_2 data
    rgb_arrays = []
    for i, row in data.iterrows():
        # 1. Reshape original data
        band_1 = np.array(row['band_1']).reshape(75, 75)  # HH polarization
        band_2 = np.array(row['band_2']).reshape(75, 75)  # HV polarization
        band_3 = band_1 / band_2                          # Create new feature (ratio)
        # Normalization process: shift by |min|, then divide by the new max
        r = (band_1 + abs(band_1.min())) / np.max((band_1 + abs(band_1.min())))
        g = (band_2 + abs(band_2.min())) / np.max((band_2 + abs(band_2.min())))
        b = (band_3 + abs(band_3.min())) / np.max((band_3 + abs(band_3.min())))
        rgb = np.dstack((r, g, b))  # Combine 3 channels into one RGB image
        rgb_arrays.append(rgb)      # Add to result list
    return np.array(rgb_arrays)     # Array of shape (n_samples, 75, 75, 3)

def denoise(X, weight, multichannel):
    # Uses TV (Total Variation) Chambolle denoising algorithm
    # (newer scikit-image versions take channel_axis instead of multichannel)
    return np.asarray([
        denoise_tv_chambolle(
            item,
            weight=weight,             # Denoising strength
            multichannel=multichannel  # RGB vs grayscale
        )
        for item in X
    ])

def smooth(X, sigma):
    # Apply Gaussian blur
    return np.asarray([
        gaussian(
            item,
            sigma=sigma  # Blur standard deviation
        )
        for item in X
    ])

def grayscale(X):
    # Convert RGB images to grayscale
    return np.asarray([
        rgb2gray(item)
        for item in X
    ])

- color_composite method: converts radar data band_1 and band_2 to RGB images

- Normalization Process Explanation:

- Handle negative values: + abs(min()) converts all values to positive

- Scaling: / np.max() scales all values to 0~1 range

- denoise method:

- Purpose: Remove noise from images

- weight: Denoising strength (higher = smoother)

- multichannel: True for RGB images, False for grayscale

- TV Chambolle: Removes noise while preserving edges

- smooth method:

- Purpose: Make images smoother

- sigma: Blur intensity (higher = blurrier)

- Image smoothing using Gaussian filter

- grayscale method:

- Purpose: Convert RGB images to black and white

- Dimension reduction effect (3 channels → 1 channel)
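The normalization used in color_composite can be tried on small arrays. In the sketch below, `shift_and_scale` is a helper written for this example and the input values are made up. It suggests that the result spans the full 0~1 range when the band's minimum is negative (as with dB values), but not when all values are positive.

```python
import numpy as np

def shift_and_scale(band):
    """Shift by |min| and divide by the new maximum, as in color_composite."""
    shifted = band + abs(band.min())
    return shifted / np.max(shifted)

# Made-up dB values (all negative): the minimum maps to 0 and the maximum to 1
db_band = np.array([[-30.0, -20.0], [-10.0, -5.0]])
scaled = shift_and_scale(db_band)

# Made-up all-positive values: the maximum still maps to 1, but the minimum does not reach 0
positive_band = np.array([[2.0, 4.0], [6.0, 8.0]])
scaled_pos = shift_and_scale(positive_band)

print(scaled.min(), scaled.max(), scaled_pos.min(), scaled_pos.max())
```

This may matter for the third channel, since a ratio of two negative bands is positive; the values still end up no larger than 1, only the lower end of the range is not anchored at 0.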

train = pd.read_json("../input/train.json")

train.inc_angle = train.inc_angle.replace('na', 0)

train.inc_angle = train.inc_angle.astype(float).fillna(0.0)

train_all = True

# These train flags are needed to train the model more efficiently and

# select proper model parameters

train_b = True or train_all

train_img = True or train_all

train_total = True or train_all

predict_submission = True and train_all

clean_all = False

clean_b = False or clean_all

clean_img = False or clean_all

load_all = False

load_b = False or load_all

load_img = False or load_all

- Setting flags using logical operators

- True or train_all: evaluates to True regardless of train_all, so the step always runs

- False or clean_all: takes the value of clean_all, so the step runs only when clean_all is True

- Modular execution control

- Can activate/deactivate each step independently

- Useful for experimentation and debugging
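How these expressions evaluate can be checked directly. The snippet below flips the master switches to False to show which flags depend on them; the variable names follow the kernel, the chosen values are only for illustration.

```python
train_all = False
clean_all = False

train_b = True or train_all              # True whatever train_all is
clean_b = False or clean_all             # follows clean_all
predict_submission = True and train_all  # follows train_all

print(train_b, clean_b, predict_submission)
```

Changing the literal on the left is therefore the per-step override, while the `*_all` variable acts as a global switch.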

# Convert nested array to numpy array

to_arr = lambda x: np.asarray([np.asarray(item) for item in x])

# Reshape 1D vector to 75x75 image

gray_reshape = lambda x: np.asarray([item.reshape(75, 75) for item in x])

# Add channel dimension to 75x75 image (75x75x1)

tf_reshape = lambda x: np.asarray([item.reshape(75, 75, 1) for item in x])

- The lambdas above are the data shape transformation helpers.

Summary so far (including the create_dataset method):

1. Original Data Format:

train['band_1']: 1D array with 5625 values (75x75=5625)

train['band_2']: 1D array with 5625 values

2. First Transformation - Convert to Image Format:

# 1D -> 2D(75x75) conversion

band_1 = gray_reshape(band_1) # (75, 75) shape

band_2 = gray_reshape(band_2) # (75, 75) shape

band_3 = (band_1 + band_2) / 2 # Average value

3. Denoising Process (if train_b and clean_b):

# For grayscale images

band_1 = denoise(band_1, weight=weight_gray, multichannel=False)

# 1. Denoise using TV Chambolle algorithm

# 2. Remove noise while preserving edges

4. Smoothing Process:

band_1 = smooth(band_1, sigma=smooth_gray)

# 1. Apply Gaussian blur

# 2. Make image smoother

# 3. Remove additional remaining noise

Complete Pipeline Example:

# Original data

[1, 2, 3, ..., 5625] (1D array)

↓

# Convert to image format

[[1, 2, 3],

[4, 5, 6],

[7, 8, 9]] (75x75 matrix)

↓

# Denoise

Apply denoise_tv_chambolle

- Reduce sudden changes

- Preserve edges

↓

# Smoothing

Apply gaussian filter

- Smooth changes

- Overall blurring

Two Data Processing Paths:

1. Band Data Processing (X_b):

Original band_1, band_2

→ Convert to 75x75

→ Denoise

→ Smooth

→ Combine 3 channels (band_1, band_2, average)

2. RGB Image Processing (X_images):

Original band_1, band_2

→ color_composite conversion (RGB)

→ Denoise (multichannel=True)

→ Smooth

- Purpose of Processing:

- Improve signal quality

- Remove unnecessary noise

- Preserve important features

- Convert to suitable format for model training

- RGB Images (X_images):

- R, G, B channels represent color information

- Visually meaningful color representation

- Band Data (X_b):

- Stores 3 different features in separate channels

- Each channel contains independent grayscale information

- Looks black and white when visualized, but actually contains 3 different feature information

- Results:

- X_b: Processed band data in shape (n_samples, 75, 75, 3)

- X_images: Processed RGB images in shape (n_samples, 75, 75, 3)

- This dual processing is meant to utilize different characteristics of the data.

3. Modeling

- The model itself consists of 3 convolutional neural networks.

- Two basic networks and one combined.

- The idea is to train two basic networks on different data representations and after that, using trained convolutional layers in combination to train common network.

- For training, I'm using 3 datasets (train, val, test): one that the network sees only once (test), plus the default Keras validation split for model selection.
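For the base network defined below (get_model_notebook), it can help to trace how the 75x75 input shrinks through the four conv/pool blocks. The sketch assumes the Keras defaults that the code relies on: 'valid' padding everywhere, and a pooling stride equal to the pool size when no stride is given. `conv_out` and `pool_out` are helpers written for this example.

```python
def conv_out(size, kernel):
    """Output size of a 'valid' convolution with stride 1."""
    return size - kernel + 1

def pool_out(size, pool, stride):
    """Output size of 'valid' max pooling."""
    return (size - pool) // stride + 1

size = 75
trace = [size]
# (conv kernel, pool size, pool stride) for the four blocks
for kernel, pool, stride in [(3, 3, 3), (3, 2, 2), (3, 2, 2), (3, 2, 2)]:
    size = conv_out(size, kernel)
    trace.append(size)
    size = pool_out(size, pool, stride)
    trace.append(size)

# The last block has 128 filters, so this is the length of the flattened vector
flattened = size * size * 128
print(trace, flattened)
```

Under these assumptions the spatial size is reduced to 1x1 before Flatten, so each base network hands a 128-dimensional feature vector to its dense layers and, later, to the combined model.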

def get_model_notebook(lr, decay, channels, relu_type='relu'):
    """
    Function to create CNN model
    Args:
        lr: Learning rate
        decay: Learning rate decay
        channels: Number of input image channels
        relu_type: Activation function type (default: 'relu')
    Returns:
        model: Complete CNN model
        partial_model: Partial model for feature extraction (excluding fully connected layers)
    """
    # Define input layer - 75x75 size image
    input_1 = Input(shape=(75, 75, channels))

    # CNN structure for feature extraction
    # First Conv block - 32 filters
    fcnn = Conv2D(32, kernel_size=(3, 3), activation=relu_type)(
        BatchNormalization()(input_1))  # Batch normalization before convolution
    fcnn = MaxPooling2D((3, 3))(fcnn)   # 3x3 max pooling to reduce size
    fcnn = Dropout(0.2)(fcnn)           # 20% dropout to prevent overfitting

    # Second Conv block - 64 filters
    fcnn = Conv2D(64, kernel_size=(3, 3), activation=relu_type)(fcnn)
    fcnn = MaxPooling2D((2, 2), strides=(2, 2))(fcnn)  # 2x2 max pooling, stride 2
    fcnn = Dropout(0.2)(fcnn)

    # Third Conv block - 128 filters
    fcnn = Conv2D(128, kernel_size=(3, 3), activation=relu_type)(fcnn)
    fcnn = MaxPooling2D((2, 2), strides=(2, 2))(fcnn)
    fcnn = Dropout(0.2)(fcnn)

    # Fourth Conv block - 128 filters
    fcnn = Conv2D(128, kernel_size=(3, 3), activation=relu_type)(fcnn)
    fcnn = MaxPooling2D((2, 2), strides=(2, 2))(fcnn)
    fcnn = Dropout(0.2)(fcnn)

    # Final feature map normalization
    fcnn = BatchNormalization()(fcnn)
    fcnn = Flatten()(fcnn)  # Flatten to 1D

    # Create partial model for feature extraction
    local_input = input_1
    partial_model = Model(input_1, fcnn)

    # Fully connected layer structure
    # Gradual reduction from 256 -> 128 -> 64 units
    dense = Dropout(0.2)(fcnn)
    dense = Dense(256, activation=relu_type)(dense)
    dense = Dropout(0.2)(dense)
    dense = Dense(128, activation=relu_type)(dense)
    dense = Dropout(0.2)(dense)
    dense = Dense(64, activation=relu_type)(dense)
    dense = Dropout(0.2)(dense)

    # Output layer for binary classification
    output = Dense(1, activation="sigmoid")(dense)

    # Create complete model
    model = Model(local_input, output)

    # Configure Adam optimizer
    optimizer = Adam(lr=lr, decay=decay)

    # Compile model - settings for binary classification
    model.compile(loss="binary_crossentropy",
                  optimizer=optimizer,
                  metrics=["accuracy"])
    return model, partial_model

def combined_model(m_b, m_img, lr, decay):
    """
    Function to combine two models (band and image models) into a new model
    Args:
        m_b: band model (feature extractor)
        m_img: image model (feature extractor)
        lr: learning rate
        decay: learning rate decay
    Returns:
        model: combined new model
    """
    # Define two input layers (75x75x3 size)
    input_b = Input(shape=(75, 75, 3))    # Input for band data
    input_img = Input(shape=(75, 75, 3))  # Input for image data

    # Model trainability:
    # currently both models remain trainable;
    # each model can be frozen using the code below
    #for layer in m_b.layers:
    #    layer.trainable = False
    #for layer in m_img.layers:
    #    layer.trainable = False

    # Extract features by passing input data to each model
    m1 = m_b(input_b)      # band model features
    m2 = m_img(input_img)  # image model features

    # Combine features from both models to create new classifier
    # Note: Considered using XGBoost but not preferred
    common = Concatenate()([m1, m2])       # Concatenate feature vectors
    common = BatchNormalization()(common)  # Normalize combined features
    common = Dropout(0.3)(common)          # 30% dropout

    # First Dense layer - 1024 units
    common = Dense(1024, activation='relu')(common)
    common = Dropout(0.3)(common)

    # Second Dense layer - 512 units
    common = Dense(512, activation='relu')(common)
    common = Dropout(0.3)(common)

    # Output layer - sigmoid activation for binary classification
    output = Dense(1, activation="sigmoid")(common)

    # Create final model - two inputs and one output
    model = Model([input_b, input_img], output)

    # Configure Adam optimizer
    # beta_1, beta_2: momentum-related parameters
    # epsilon: small constant for numerical stability
    optimizer = Adam(lr=lr, beta_1=0.9, beta_2=0.999, epsilon=1e-08, decay=decay)

    # Compile model
    model.compile(loss="binary_crossentropy",  # Loss function for binary classification
                  optimizer=optimizer,         # Configured optimizer
                  metrics=["accuracy"])        # Evaluation metrics
    return model

def gen_flow_multi_inputs(I1, I2, y, batch_size):
    """
    Function to create data augmentation generator for two input images
    Args:
        I1: First input image array (band data)
        I2: Second input image array (image data)
        y: Label data
        batch_size: Batch size
    Yields:
        ([augmented_I1, augmented_I2], augmented_y): Augmented image pairs and corresponding labels
    """
    # Configure first data augmenter
    gen1 = ImageDataGenerator(
        horizontal_flip=True,    # Allow horizontal flip
        vertical_flip=True,      # Allow vertical flip
        width_shift_range=0.,    # Disable horizontal shift
        height_shift_range=0.,   # Disable vertical shift
        channel_shift_range=0,   # Disable channel shift
        zoom_range=0.2,          # Zoom in/out within 20% range
        rotation_range=10        # Rotate within ±10 degrees
    )
    # Configure second data augmenter (same settings as first)
    gen2 = ImageDataGenerator(
        horizontal_flip=True,
        vertical_flip=True,
        width_shift_range=0.,
        height_shift_range=0.,
        channel_shift_range=0,
        zoom_range=0.2,
        rotation_range=10
    )
    # First generator: connect I1 images with labels y
    genI1 = gen1.flow(
        I1, y,
        batch_size=batch_size,
        seed=57,        # Set seed for reproducibility
        shuffle=False   # Maintain data order
    )
    # Second generator: connect I1 images with I2 images
    genI2 = gen2.flow(
        I1, I2,
        batch_size=batch_size,
        seed=57,        # Same seed as first generator
        shuffle=False   # Maintain data order
    )
    # Generate batch data in infinite loop
    while True:
        # Get next batch from each generator
        I1i = next(genI1)
        I2i = next(genI2)
        # Verify that I1 images from both generators are identical (validation)
        np.testing.assert_array_equal(I2i[0], I1i[0])
        # Return image pairs and labels
        # I1i[0]: Augmented first image
        # I2i[1]: Second image, passed along as the "label" of the second generator
        # I1i[1]: Corresponding labels
        yield [I1i[0], I2i[1]], I1i[1]

def train_model(model, batch_size, epochs, checkpoint_name, X_train, y_train, val_data, verbose=2):

    """
    Function to train model and save the best model

    Args:
        model: Model to train
        batch_size: Batch size
        epochs: Number of training epochs
        checkpoint_name: Model save filename
        X_train: Training data
        y_train: Training data labels
        val_data: Validation data tuple in form of (x_test, y_test)
        verbose: Verbosity level (default: 2)

    Returns:
        model: Trained model (with best weights loaded)
    """
    # Configure checkpoint callback
    # save_best_only=True: Save only the best model
    # monitor='val_loss': Select model based on validation loss
    callbacks = [ModelCheckpoint(checkpoint_name,
                                 save_best_only=True,
                                 monitor='val_loss')]

    # Configure data augmenter
    datagen = ImageDataGenerator(
        horizontal_flip=True,    # Horizontal flip
        vertical_flip=True,      # Vertical flip
        width_shift_range=0.,    # Disable horizontal shift
        height_shift_range=0.,   # Disable vertical shift
        channel_shift_range=0,   # Disable channel shift
        zoom_range=0.2,          # Zoom in/out within 20% range
        rotation_range=10        # Rotate within ±10 degrees
    )

    # Unpack validation data
    x_test, y_test = val_data

    try:
        # Train model with data augmentation
        model.fit_generator(
            # Generator for augmented data
            datagen.flow(X_train, y_train, batch_size=batch_size),
            # Training settings
            epochs=epochs,
            # Calculate batches per epoch
            steps_per_epoch=len(X_train) / batch_size,
            # Set validation data (no augmentation)
            validation_data=(x_test, y_test),
            verbose=1,
            callbacks=callbacks
        )
    except KeyboardInterrupt:
        # Handle training interruption
        if verbose > 0:
            print('Interrupted')

    # Load best model weights
    if verbose > 0:
        print('Loading model')
    model.load_weights(filepath=checkpoint_name)

    return model


def gen_model_weights(lr, decay, channels, relu, batch_size, epochs, path_name, data, verbose=2):

    """
    Integrated function to create and train model

    Args:
        lr: Learning rate
        decay: Learning rate decay
        channels: Number of input image channels
        relu: Activation function type
        batch_size: Batch size
        epochs: Number of training epochs
        path_name: Model save path
        data: Data tuple in form of (X_train, y_train, X_test, y_test, X_val, y_val)
        verbose: Verbosity level (default: 2)

    Returns:
        model: Trained complete model
        partial_model: Partial model for feature extraction
    """
    # Unpack input data
    X_train, y_train, X_test, y_test, X_val, y_val = data

    # Create model
    # Generate complete and partial models through get_model_notebook function
    model, partial_model = get_model_notebook(lr, decay, channels, relu)

    # Train model
    # Perform model training using train_model function
    # Use X_test, y_test as validation data (checkpoint selection is based on this split)
    model = train_model(model,
                        batch_size=batch_size,
                        epochs=epochs,
                        checkpoint_name=path_name,
                        X_train=X_train,
                        y_train=y_train,
                        val_data=(X_test, y_test),
                        verbose=verbose)

    # Perform performance evaluation only when verbose > 0
    if verbose > 0:
        # Evaluate performance on validation set
        loss_val, acc_val = model.evaluate(X_val, y_val,
                                           verbose=0,
                                           batch_size=batch_size)

        # Evaluate performance on the test split used for checkpointing
        # (the variables are named *_train, but X_test, y_test is what gets evaluated)
        loss_train, acc_train = model.evaluate(X_test, y_test,
                                               verbose=0,
                                               batch_size=batch_size)

        # Print performance metrics
        print('Val/Train Loss:', str(loss_val) + '/' + str(loss_train),
              'Val/Train Acc:', str(acc_val) + '/' + str(acc_train))

    return model, partial_model


def train_models(dataset, lr, batch_size, max_epoch, verbose=2, return_model=False):

    """
    Function to train 3 models (bandwidth network, image network, combined network)

    Args:
        dataset: Tuple of (y_train, X_b, X_images). y_train is labels, X_b is bandwidth data, X_images is image data
        lr: Learning rate
        batch_size: Batch size
        max_epoch: Maximum number of epochs
        verbose: Verbosity level (0: no output, 1: progress bar, 2: one line per epoch)
        return_model: If True returns trained model, if False returns performance metrics
    """
    # Unpack input dataset
    y_train, X_b, X_images = dataset

    # First data split: Split all data into training(90%) and validation(10%)
    # _full: for training, _val: for validation
    y_train_full, y_val,\
    X_b_full, X_b_val,\
    X_images_full, X_images_val = train_test_split(y_train, X_b, X_images,
                                                   random_state=687, train_size=0.9)

    # Second data split: Split training data into training(85%) and test(15%)
    y_train, y_test, \
    X_b_train, X_b_test, \
    X_images_train, X_images_test = train_test_split(y_train_full, X_b_full, X_images_full,
                                                     random_state=576, train_size=0.85)

    # Train Bandwidth network
    if train_b:
        if verbose > 0:
            print('Training bandwidth network')

        # Prepare bandwidth data
        data_b1 = (X_b_train, y_train, X_b_test, y_test, X_b_val, y_val)

        # Create and train bandwidth model
        # model_b: complete model, model_b_cut: feature extraction part
        model_b, model_b_cut = gen_model_weights(lr, 1e-6, 3, 'relu', batch_size, max_epoch, 'model_b',
                                                 data=data_b1, verbose=verbose)

    # Train Image network
    if train_img:
        if verbose > 0:
            print('Training image network')

        # Prepare image data
        data_images = (X_images_train, y_train, X_images_test, y_test, X_images_val, y_val)

        # Create and train image model
        # model_images: complete model, model_images_cut: feature extraction part
        model_images, model_images_cut = gen_model_weights(lr, 1e-6, 3, 'relu', batch_size, max_epoch, 'model_img',
                                                           data=data_images, verbose=verbose)

    # Train combined network of two networks
    if train_total:
        # Create new model by combining bandwidth and image models
        # Learning rate set to half of individual models
        common_model = combined_model(model_b_cut, model_images_cut, lr/2, 1e-7)

        # Prepare training and validation data
        common_x_train = [X_b_full, X_images_full]
        common_y_train = y_train_full
        common_x_val = [X_b_val, X_images_val]
        common_y_val = y_val

        if verbose > 0:
            print('Training common network')

        # Set checkpoint callback - save best model
        callbacks = [ModelCheckpoint('common', save_best_only=True, monitor='val_loss')]

        try:
            # Train model with data augmentation (epochs fixed at 30, independent of max_epoch)
            common_model.fit_generator(gen_flow_multi_inputs(X_b_full, X_images_full, y_train_full, batch_size),
                                       epochs=30,
                                       steps_per_epoch=len(X_b_full) / batch_size,
                                       validation_data=(common_x_val, common_y_val),
                                       verbose=1,
                                       callbacks=callbacks)
        except KeyboardInterrupt:
            # Training interrupted: fall through and load the best saved model
            pass

        # Load weights of best saved model
        common_model.load_weights(filepath='common')

        # Evaluate performance on validation data
        loss_val, acc_val = common_model.evaluate(common_x_val, common_y_val,
                                                  verbose=0, batch_size=batch_size)

        # Evaluate performance on training data
        loss_train, acc_train = common_model.evaluate(common_x_train, common_y_train,
                                                      verbose=0, batch_size=batch_size)

        if verbose > 0:
            print('Loss:', loss_val, 'Acc:', acc_val)

    # Set return value
    if return_model:
        return common_model  # Return trained model
    else:
        return (loss_train, acc_train), (loss_val, acc_val)  # Return performance metrics


# Best parameters I got are
# epochs : 250
# learning rate : 8e-5
# batch size : 32
# Note: the call below uses lr=7e-04 and max_epoch=50 rather than the values listed above
common_model = train_models((y_train, X_b, X_images), 7e-04, 32, 50, 1, return_model=True)

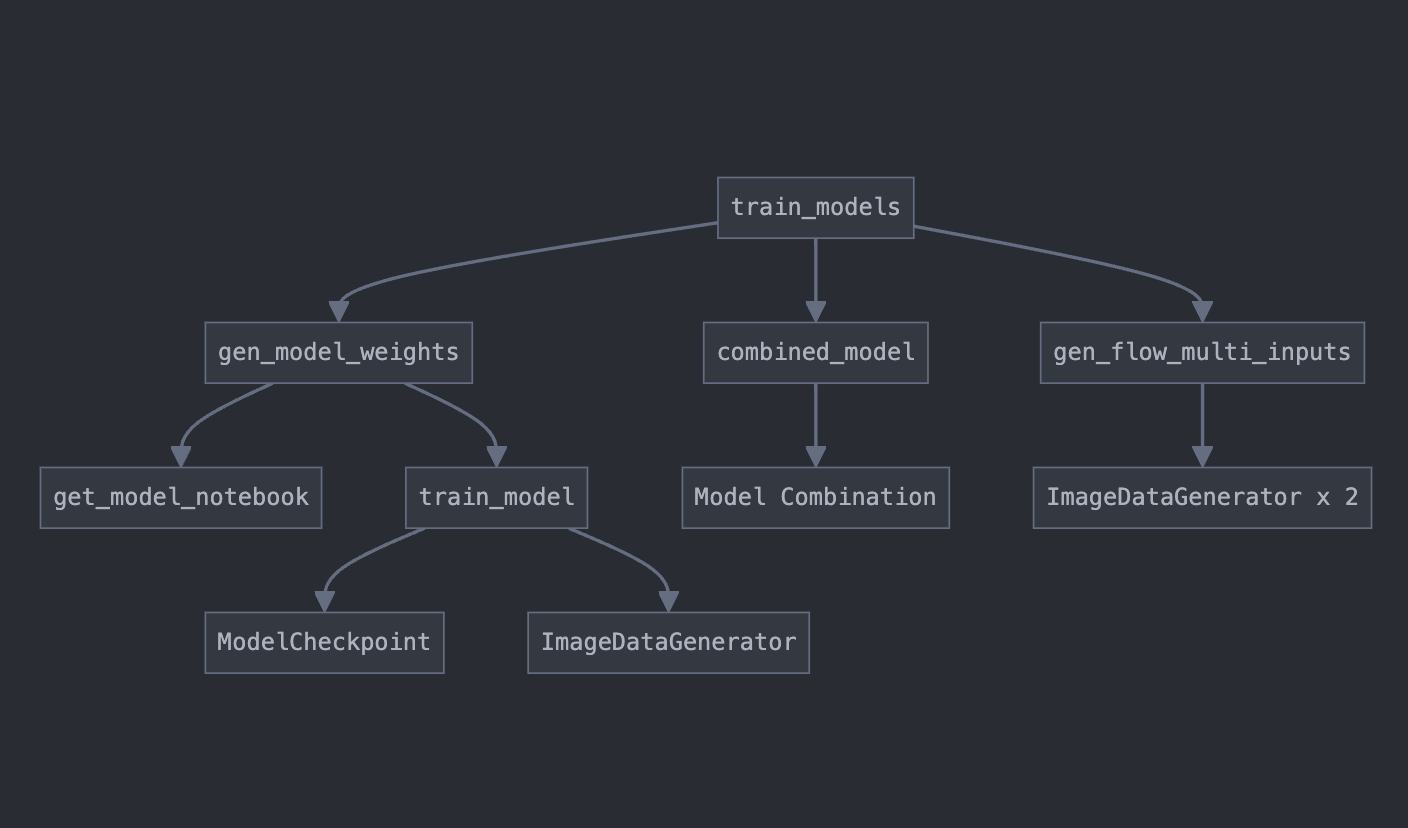
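The call above passes `lr=7e-04`, and `train_models` builds the combined network with `lr/2` and `decay=1e-7`. As a rough sketch of what those numbers mean, the snippet below assumes the time-based rule used by the legacy Keras `decay` argument, `lr / (1 + decay * iterations)`, applied once per batch update. The helper name `legacy_decayed_lr` is hypothetical and is not part of the kernel.

```python
def legacy_decayed_lr(base_lr: float, decay: float, iteration: int) -> float:
    """Time-based decay, assumed to match the legacy Keras `decay` argument."""
    return base_lr / (1.0 + decay * iteration)


base_lr = 7e-04             # learning rate passed to train_models in the call above
combined_lr = base_lr / 2   # combined_model is created with lr/2
combined_decay = 1e-7       # decay passed to combined_model

# Show how slowly the step size shrinks over a large number of batch updates.
for iteration in (0, 1_000, 100_000):
    lr_now = legacy_decayed_lr(combined_lr, combined_decay, iteration)
    print(f"iteration {iteration:>7}: lr = {lr_now:.6e}")
```

With a decay this small, the learning rate changes by only about 1% after 100,000 updates, so under this assumption the halved starting rate matters far more than the decay term.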

Summary:

- train_models: Manages the entire training process

- gen_model_weights: Manages single model creation and training

- get_model_notebook: Creates CNN model architecture

- train_model: Performs actual model training

- combined_model: Creates new model by combining two models

- gen_flow_multi_inputs: Generates data augmentation for two inputs

train_models
├── Input: dataset(y_train, X_b, X_images), lr, batch_size, max_epoch
├── Data split (using train_test_split twice)
│   ├── First split: train_full and validation
│   └── Second split: train and test
│
├── Bandwidth model training (when train_b=True)
│   └── Call gen_model_weights
│       ├── Call get_model_notebook: Create model architecture
│       └── Call train_model: Train model
│
├── Image model training (when train_img=True)
│   └── Call gen_model_weights
│       ├── Call get_model_notebook: Create model architecture
│       └── Call train_model: Train model
│
└── Combined model training (when train_total=True)
    ├── Call combined_model: Combine two models
    └── Use gen_flow_multi_inputs: Data augmentation
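Because train_test_split is applied twice (train_size=0.9, then train_size=0.85 on the remaining part), the share of the original data that each split receives is a product of the two ratios. The following is a small arithmetic sketch of that; `effective_fractions` is a hypothetical helper, and exact sample counts in practice depend on how the library rounds.

```python
def effective_fractions(first_train: float = 0.9, second_train: float = 0.85):
    """Return the (train, test, val) shares of the full dataset after two nested splits."""
    val = 1.0 - first_train                       # held out by the first split
    train = first_train * second_train            # kept by both splits
    test = first_train * (1.0 - second_train)     # held out by the second split
    return train, test, val


train, test, val = effective_fractions()
print(f"train={train:.3f}, test={test:.3f}, val={val:.3f}")
# prints: train=0.765, test=0.135, val=0.100
```

So the bandwidth and image networks each train on roughly 76.5% of the data and checkpoint on 13.5%, while the combined network trains on the full 90% (`X_b_full`, `X_images_full`) and is validated on the remaining 10%.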

Failure is not the end, but rather an opportunity for growth and improvement.

- Max Holloway -
